- The Column-09-21-2018

- Volume 14

- Issue 9

Are You Producing Data or Information?

Can we see the wood for the trees?

We spend most of our working lives producing data, both qualitative and quantitative. It is our primary output and the reason we are employed. My simple question is: Do we focus on the data rather than the information that the data might represent?

Data systems can generate a “result” if we input sample and standard weights, dilution factors, and concentrations of standard solutions. We can report the qualitative output without a lot of thought: yes, it’s there, or no, it’s not. The mass spectrometry (MS) library search tells us that it’s compound X, or its accurate mass is Y, or its empirical formula is such and such. But what does this actually mean to our clients (either internal or external)? As we industrialize the science and push for higher efficiencies and greater productivity, do we lose the ability to generate information rather than data? To me these are two very different things.

The ability to produce information that guides a project, aids in troubleshooting a process, or allows properly informed commercial decisions within the business is often much more valuable than a raw number, a list of compounds, or an accurate mass and empirical formula. All too often we work as disparate functions; the analytical laboratory is a satellite (physically or metaphorically) that serves many masters and exists to pass or fail, confirm or deny, and provide the data they have requested. Sometimes in the laboratory we might not even know who “they” are; “they” may never venture into the analytical space. This isn’t right; it isn’t how it should be.

I wonder how many clients actually know what information we are capable of producing, and how many of them understand our techniques well enough to make the right analytical “requests”. Do they know which questions to ask?

I believe there are two aspects that need to be developed to properly function as a key provider of information:

1. To allow users of the service to better understand our capabilities and empower them to ask the right questions of us and to better understand our outputs;

2. For analytical chemists to strive to understand more of the context of their work, the background to the analysis, the chemistry involved, and the place of the results in this wider context.

In respect of point number 1, I remember a time when a great deal of emphasis was placed on educating analytical service users on the background to our techniques and the capabilities of our instrumentation. There were many training sessions on the use of data from the analytical laboratory and the inferences that could or could not be drawn from data produced by the various techniques. I see precious little of this happening in the modern analytical laboratory. In fact, the fashion now seems to be that when things have gone wrong or unusual data are produced, the laboratory manager or team leader is summoned to the project meeting. I wonder, do they know enough about the analysis to draw proper inference from the data and provide a guiding hand to the project (whatever this may be)? Sometimes I wonder if the presence of the analytical chemist who is the subject matter expert or has carried out the analysis would be a lot more informative!

Often the recipients of the data are unaware of its limitations, unable to properly interpret the numbers or the facts, and unaware of the level of error or uncertainty that accompanies the data. I am certain that wild decisions are made that are wholly unsupported by the analytical output. There is a worrying disconnect.
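To make the point about uncertainty concrete, here is a minimal sketch of how the same reported number can support different conclusions once its uncertainty is taken into account. Everything in it is an assumption for illustration: the `Result` type, the `interpret` function, the simple rule of comparing the whole interval with the limits, and all of the numbers are hypothetical, and they are not taken from any particular guideline or laboratory procedure.

```python
from dataclasses import dataclass


@dataclass
class Result:
    value: float                 # reported result, e.g. assay in % w/w
    expanded_uncertainty: float  # half-width of the interval, same units


def interpret(result: Result, lower_limit: float, upper_limit: float) -> str:
    """Compare the whole interval (value +/- U) with the limits, not just the value."""
    low = result.value - result.expanded_uncertainty
    high = result.value + result.expanded_uncertainty
    if low >= lower_limit and high <= upper_limit:
        return "within specification"
    if high < lower_limit or low > upper_limit:
        return "outside specification"
    return "inconclusive: interval straddles a limit"


# Hypothetical results against a hypothetical 98.0 to 102.0 specification.
print(interpret(Result(98.2, 0.5), 98.0, 102.0))  # value passes, interval does not
print(interpret(Result(99.5, 0.5), 98.0, 102.0))
print(interpret(Result(97.0, 0.5), 98.0, 102.0))
```

A recipient who sees only “98.2” would read the first result as a pass; one who also sees the uncertainty would know the result cannot, on its own, settle the question.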

I believe we are a little scared of point number 2. In discussions on this topic I hear responses such as “It’s not our place to make that decision”, “it’s a project decision”, “that’s not something we can comment on”, “we aren’t in a position to make comments on why this has occurred”. My simple counter question to these statements is “why not?” Do we not want to be the ones who take the blame if our advice is incorrect? Do we not have the knowledge to give advice on what might be done to overcome the issues that we may have highlighted? Do we not have time to get involved?

In truth the answer is probably a little of all of the above. We often don’t have time to get involved in every project that needs a little more commentary on our data. There will be many cases where we don’t have the breadth of knowledge to properly interpret our data in the wider context. And yes, why should we stick our necks out and be the ones to take the rap if our judgement is awry? Analytical science used to be much more collaborative; we used to get involved in meetings with our service users and help them to understand what can and, crucially, cannot be inferred from our data. Why has this changed so much? I believe it is, once again, down to industrialization, where in many cases we are simply producers of data, both qualitative and quantitative. This is in some ways self-inflicted, and we have undertaken this role too lightly. After all, it’s much easier to be insular, and much more straightforward to make sure that, according to our own processes and procedures, our data are of excellent quality. But beware the lack of the wider context, of not seeing the wood for the trees.

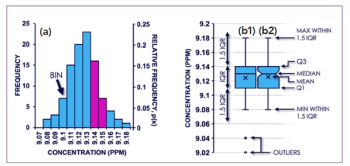

If we have a specification or expected range of values for this number, then we may have a feel for the validity of the data. However, if the analysis is investigational or if no expected range exists, do we ever question the number that is produced?

We are very concerned about the system suitability and quality control (QC) data hitting their specification targets and can often spend hours tabulating the data and ensuring that we are “compliant” and that the system is producing “fit-for-purpose” data. But does this mean that our data are “correct” in a contextual sense?

QC laboratory methods are very often undertaken without any idea of the analyte structure, its physicochemical properties, or the chemical nature of the matrix. It’s perfectly possible to produce “fit-for-purpose” data (from the checks and balances constituted by QC and system suitability), but knowing the context must surely help with troubleshooting or interrogating the data for abnormalities not seen in individual or smaller numbers of data points. A good point to consider here is the out-of-specification (OOS) result. What happens in your laboratory when an OOS event occurs? Sometimes this will be reported as a failed batch and a re-test will occur, after which a decision will be made, usually according to an investigation protocol. But how much investigation is undertaken outside the confines of the protocol? What can be learned about the manufacturing process, the synthetic route, or the pilot plant production method in light of the failed sample?

And how much is automation to blame in our lack of context? We often use templates to generate written reports and may populate tabulated data using information electronically transferred from the data system. In theory we may place the sample on one end of the system and the results are reported to us at the other. How much does this allow us to understand the nature of the failed results?

Studies with airline pilots show that whilst automation allows them time to think ahead and consider the wider context of the flight, any problems or struggles with automation make the pilots turn inward, concentrate much more on the tasks at hand, and become more obsessed with solving these perhaps minor issues, often to the detriment of the big picture. Given the higher levels of automation in the laboratory, is there anything we can learn from this?

Of course, by no means is every laboratory guilty of being so insular, but I do see a general trend in which we are much more concerned that our data are “correct” without considering what information they may represent to the service user. Denis Waitley, the American author and motivational speaker, wrote, “You must look within for value, but must look beyond for perspective.” I think we can benefit greatly from bearing this in mind in our daily work.

Contact author: Incognito
