E-Separation Solutions

- E-Separation Solutions-11-24-2009

Data Handling/Software

The ever-increasing volume of data generated in chromatography laboratories drives the development of sophisticated software for data handling and analysis. Participants in this Technology Forum are Linda Doherty of Agilent Technologies, Tom Jupille of LC Resources, and Barry Coope of Thermo Fisher Scientific.

What are the current trends in software for the chromatography laboratory?

Doherty: Customers are moving from isolated workstations to distributed solutions. This trend is driven by the need to standardize laboratory computing. Over the years, laboratory IT infrastructure has been granted corporate exceptions to run as an isolated environment. Larger companies are leading the trend toward a partnership between IT and laboratory management to provide secure, robust computing solutions. Decisions on new software solutions are based on both the software’s capabilities and how easily IT can support it. Other trends include consolidating data from a variety of manufacturers’ systems into a single data repository so that the data can be recalled through metadata searches and reviewed.

Jupille: I think they parallel trends in the world at large: a continuing move toward “seamless” integration of the computer into our daily lives. In the lab, this means a blurring of the distinctions among instrument controller, data system, lab notebook, conferencing/meeting . . . Imagine the computer/“tricorder” in Star Trek.

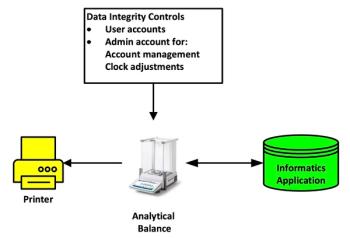

Coope: Integration between laboratory systems is more important than ever. To run efficiently, laboratories need to connect their chromatography data to laboratory management systems and automate its transfer, eliminating delays and manual transcription steps from their processes.

What software applications for the analytical laboratory are undergoing the most rapid evolution?

Doherty: The electronic laboratory notebook (ELN) is the number one growth area. The need to verify and protect the medicinal chemist’s work with analytical experiments and results has made the expansion of ELNs into new laboratory environments a natural step. Content management and standardization of content (XML-based results and reporting) is probably the second fastest-growing application. Companies are seeing an explosion in data and need more sophisticated ways to recall and review data in order to make sound decisions quickly.

Jupille: I think it’s in programs that we don’t see: the control software that runs the instruments, monitors the pressure, operates the check valves, reprimes the pump, and so forth. Many functions that used to be the user’s responsibility are now carried out invisibly in the background. In effect, formerly valuable skills (how to prime a pump, for example) are now almost irrelevant. To stretch an analogy: how much do you have to know about the Hertz-Helmholtz equations and klystron tube electronics to use a microwave oven? Chromatography systems are becoming much more “appliance-like” and therefore much more accessible and valuable to the nonexpert user.

Coope: ELN is playing an increasingly important role for laboratories. Interfacing to LIMS has always been important for CDS software, but people also want to interface to ELN, linking their sample preparation steps to the results in the experimental record.

What are the greatest difficulties encountered in developing new software for chromatographers?

Doherty: “Make it easier to use, but don’t impact my workflows.” Younger laboratory scientists are looking for user interfaces and experiences that are more like the social networks that are so familiar to them. Traditional software packages are complex and follow paradigms that many of these younger scientists have no experience with. In contrast, more experienced scientists would like easier-to-use solutions, but the workflow changes that usability enhancements may bring can affect overall lab productivity and may not be perceived as a benefit in the short term.

Jupille: The “That’s not the way we do it!” response from chromatographers. That attitude runs counter to the trend that fewer and fewer users of chromatographs are chromatographers.

Coope: Supporting control of other vendors’ instruments has always been the greatest challenge. Laboratories wish to standardize their software but keep instrument vendor flexibility. Over the past few years, vendors have recognized that our customers need us to work together.

What are some areas for potential new software development in the chromatography laboratory?

Doherty: The most important areas for development are well-documented data models and tools to visualize, interpret, and report on data today, but, more importantly, the ability to mine that data in the future as new ideas emerge that will lead to new insights and discoveries.

Jupille: I’ll focus on method development, which is my area of interest. I don’t think this is new, but it’s certainly key: the continuing integration of programs for data search, instrument control, data acquisition, separation modeling, statistical interpretation, documentation, and information management into a seamless whole with a consistent user interface across different vendors’ hardware. To use (another) analogy, try to imagine what our lives would be like if we steered a Toyota with pedals, a Ford with a joystick, a Chevy with a steering wheel, and a Honda by leaning right or left, and if a Dodge had the accelerator pedal on the left and the brake on the right. The “conventional” control arrangement may stifle creativity, but it makes things easy for the majority of drivers (at least until we have to go from North America or Europe to the UK!). The same argument could be made for analytical instruments.

Coope: Supporting mass spectrometers as chromatography detectors is becoming more important. Today, mass spectrometry (MS) support is limited in chromatography software, but I believe multi-instrument MS support will be a growing trend in the next few years.

If you are interested in participating in any upcoming Technology Forums, please contact Group Technical Editor
