- January/February 2026

- Volume 3

- Issue 1

- Pages: 12–14

Is My Calibration Model Reliable? Part I: Validating Calibration Curves in Size-Exclusion Chromatography

Key Takeaways

- SEC calibration links elution volume/time to polymer hydrodynamic volume using standards, requiring model-form selection that preserves predictive molecular-weight distribution fidelity.

- Least-squares regression minimizes summed squared residuals, and R² quantifies explained variance but systematically favors higher-parameter polynomials regardless of true signal.

Bob Pirok discusses the use of R², adjusted R², and F-tests to pick the right SEC calibration curve polynomial to avoid overfitting.

From calibration to retention modelling, modelling relationships are a cornerstone of analytical separations. In future articles, we will learn about practical validation tools for models. In this article, we use the coefficient of determination, R², adjusted R², and the F-test to answer a frequently asked question from polymer analysts: “Do I need to use a third- or fifth-order polynomial for my size-exclusion chromatography (SEC) calibration?” These practical tools allow us to balance model complexity against statistical significance.

Whether we like it or not, chromatographers engage with models and calibration curves daily. Quantitative analysis hinges on accurate calibration of detector response, retention models shape how we design and predict separations, and calibration of elution volume versus molecular size is paramount to distil a reliable molecular-weight distribution using size-exclusion chromatography/gel permeation chromatography (SEC/GPC). These tools form the mathematical backbone of modern chromatography.

However, our models are only as good as the data and the mathematical form we choose to fit that data. Unsurprisingly, a question that I am frequently asked is “How do I know if my model is good enough?” This question is also of huge importance to the implementation of artificial intelligence (AI) in chromatography. We will therefore devote attention to this topic by learning about validation tools in combination with different use cases. In the first installment of the series, we start with the coefficient of determination (the well-known R²) and the so-called F-test of significance by reviewing a question that many polymer analysts know well: Do we use a third-order or a fifth-order polynomial to calibrate our SEC separation?

Size-Exclusion Chromatography

In polymer analysis, a very common method for the determination of the molecular-weight distribution (MWD) is SEC. Larger molecules are partially excluded from the pores of the stationary phase and will elute earlier than smaller components. As such, the elution time of the analyte is related to its size (strictly speaking, its hydrodynamic volume), and analysts therefore use standards to calibrate the elution time to molecular weight. For both practical and fundamental reasons, the analyst is typically required to choose between a third-order and a fifth-order polynomial. Validation is needed to ensure that the calibration model is reliable, neither underfitting the trend nor overfitting random noise.

Least Squares Regression

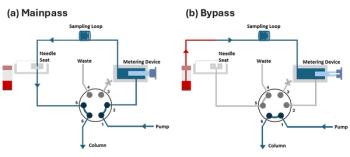

Least-squares regression lies at the core of building any chromatographic model, as illustrated in Figure 1a. A mathematical function is fitted to the data in such a way that the differences between the measured values (y) and the model predictions (ŷ), the residuals e = y − ŷ, are minimized. To prevent positive and negative errors from cancelling each other out, each error is squared (e²) before summation, resulting in the familiar sum of squares, Σ(e²). Minimizing Σ(e²) is what defines the “least-squares” approach. The coefficient of determination, R², expresses how much of the total variability in the data (SStot) is explained by the model, by comparing it with the variability that the model leaves unexplained (SSres, the residual sum of squares), as shown in equation 1 and in Figures 1a and 1b.

$$R^2 = 1 - \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}} \qquad (1)$$
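As a minimal sketch of the procedure described above, the following Python snippet fits polynomials of increasing order by least squares and computes R² from SSres and SStot. The data are synthetic and invented purely for illustration (they are not the standards shown in the figures), and the names `r_squared`, `volume`, and `log_m` are assumptions of this example.

```python
import numpy as np


def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    ss_res = np.sum((y - y_hat) ** 2)      # unexplained (residual) variation
    ss_tot = np.sum((y - y.mean()) ** 2)   # total variation around the mean
    return 1.0 - ss_res / ss_tot


# Synthetic calibration data (made up for illustration only):
# elution volume in mL versus log10 of molecular weight.
rng = np.random.default_rng(0)
volume = np.linspace(5.0, 10.0, 12)
log_m = (6.5 - 0.9 * (volume - 5.0) + 0.05 * (volume - 7.5) ** 3
         + rng.normal(0.0, 0.02, volume.size))

r2_by_order = {}
for order in (1, 3, 5):
    # Polynomial.fit solves the least-squares problem on a rescaled
    # domain, which keeps higher-order fits numerically well behaved.
    model = np.polynomial.Polynomial.fit(volume, log_m, order)
    r2_by_order[order] = r_squared(log_m, model(volume))
    print(f"order {order}: R^2 = {r2_by_order[order]:.5f}")
```

Because each lower-order polynomial is a special case of the higher-order one, the printed R² can only stay equal or increase with the order, whatever the noise in the data.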

Figure 2a shows an example of data obtained from an SEC calibration process, where different certified polymer standards were injected. A third-order polynomial (green, solid line) and a fifth-order polynomial (red, dashed line) are fitted through it. The coefficients of determination are 0.9988 and 0.9994 for the third- and fifth-order polynomial, respectively. By itself, this difference is not very meaningful, because the pitfall of R² is that it will always reward models with a larger number of parameters, as they can capture more variation in the data, including noise. The adjusted R² value corrects for this by first dividing each sum of squares by its number of degrees of freedom. For n data points and a polynomial of order k (k coefficients plus an intercept), this gives:

$$R^2_{\mathrm{adj}} = 1 - \frac{SS_{\mathrm{res}} / (n - k - 1)}{SS_{\mathrm{tot}} / (n - 1)}$$

The adjusted R² is shown in Figure 2a to be 0.9981 for the third-order polynomial and 0.9983 for the fifth-order polynomial. A fairer comparison indeed, but still not very informative.
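The degrees-of-freedom correction can be sketched as a small helper. This is an illustrative implementation of the textbook adjusted R²; the function name `adjusted_r_squared` and the number of data points used in the example (10) are hypothetical and are not taken from the article's data set.

```python
def adjusted_r_squared(r2, n_points, order):
    """Adjusted R^2 for a polynomial of the given order.

    The model has `order` coefficients plus an intercept, so the
    residual degrees of freedom are n_points - order - 1.
    """
    df_res = n_points - order - 1
    if df_res <= 0:
        raise ValueError("not enough data points for this polynomial order")
    df_tot = n_points - 1
    # Equivalent to 1 - (SS_res / df_res) / (SS_tot / df_tot)
    return 1.0 - (1.0 - r2) * df_tot / df_res


# Hypothetical example: the same R^2 is penalized more strongly
# when it was obtained with a higher-order polynomial.
for order in (3, 5):
    print(order, adjusted_r_squared(0.99, n_points=10, order=order))
```

The penalty grows quickly when the number of standards is small relative to the polynomial order, which is the typical situation in SEC calibration.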

The F-Test: Is a More Complex Model Significantly Better?

While R² gives a goodness-of-fit measure and the adjusted R² a complexity-penalized variant, the F-test allows a more stringent statistical comparison between a simpler (reduced) model and a more complex (full) model. For our purposes, we pose questions like: Does a fifth-order polynomial fit the SEC data significantly better than a third-order polynomial? The F-test works by comparing the decrease in the residual sum of squares (RSS, the summed squared errors) achieved by the more complex model to the remaining unexplained variance. In other words, how much did the error reduce by adding the extra term(s), and is that reduction large relative to the inherent noise? For n data points and polynomials of order k (reduced) and k′ (full), we calculate an F statistic from:

$$F_{\mathrm{obs}} = \frac{\left(RSS_{\mathrm{reduced}} - RSS_{\mathrm{full}}\right) / \left(k' - k\right)}{RSS_{\mathrm{full}} / \left(n - k' - 1\right)}$$

This Fobs statistic can then be used in a hypothesis test with the null hypothesis H₀ (addition of the extra terms is not significant) and the alternative H₁ (addition of the extra terms is significant). If we now compare our third-order (reduced) and fifth-order (full) polynomials, we obtain a p-value of 4.2 × 10⁻⁴, which is below an α of 0.05, strongly suggesting that the fifth-order polynomial does capture meaningful variation in the data better than the third-order polynomial.

Out of curiosity, we can also test whether a sixth-order polynomial would be better; however, the resulting p-value of 0.1969 indicates that including an additional term does not lead to a statistically significant improvement in the model’s fit to the data.
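The comparison of nested polynomials described above can be sketched in Python as follows. This is an illustrative implementation of the standard F-test for nested least-squares models, assuming NumPy and SciPy are available. The helper names `rss_polynomial` and `nested_f_test` and the synthetic data are assumptions of this example, so the p-values it prints will not match the values quoted in the text.

```python
import numpy as np
from scipy import stats


def rss_polynomial(x, y, order):
    """Residual sum of squares of a least-squares polynomial fit."""
    model = np.polynomial.Polynomial.fit(x, y, order)
    return float(np.sum((y - model(x)) ** 2))


def nested_f_test(x, y, order_reduced, order_full):
    """F-test: does the higher-order polynomial fit significantly better?"""
    if order_full <= order_reduced:
        raise ValueError("the full model must have the higher order")
    n = len(x)
    df_num = order_full - order_reduced   # number of extra terms
    df_den = n - order_full - 1           # residual d.o.f. of the full model
    if df_den <= 0:
        raise ValueError("not enough data points for the full model")
    rss_reduced = rss_polynomial(x, y, order_reduced)
    rss_full = rss_polynomial(x, y, order_full)
    f_obs = ((rss_reduced - rss_full) / df_num) / (rss_full / df_den)
    # Probability of an F value at least this large if H0 were true
    p_value = float(stats.f.sf(f_obs, df_num, df_den))
    return f_obs, p_value


# Synthetic calibration data (made up for illustration only).
rng = np.random.default_rng(0)
volume = np.linspace(5.0, 10.0, 12)
log_m = (6.5 - 0.9 * (volume - 5.0) + 0.05 * (volume - 7.5) ** 3
         + rng.normal(0.0, 0.02, volume.size))

for reduced, full in ((1, 3), (3, 5), (5, 6)):
    f_obs, p_value = nested_f_test(volume, log_m, reduced, full)
    print(f"order {reduced} vs {full}: F = {f_obs:.2f}, p = {p_value:.4f}")
```

A p-value below the chosen α (for example 0.05) would lead us to reject H₀ and keep the extra terms; otherwise the simpler polynomial is retained.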

Conclusions

Model validation is an indispensable step in constructing calibration curves. A high R² alone is not a guarantee of a good model; one must check for overfitting and lack-of-fit using tools like the adjusted R² and the F-test. In our SEC example, these tools justified the fifth-order polynomial over the third-order one and saved us from adding an unnecessary sixth-order term. The end goal is a calibration that not only fits the observed data well but also predicts new values reliably. By critically evaluating R², applying the F-test, and examining residuals, we ensure our calibration models stand on solid statistical ground. In the next installment, we will extend this validation mindset to the perspective of the data points and investigate the concepts of leverage, influence, and residual outliers.

References

(1) Pirok, B. W. J.; Schoenmakers, P. J. Chapter 9: Data Analysis. Analytical Separation Science; Royal Society of Chemistry, 2025; pp. 611–756. DOI: 10.1039/9781837674824

(2) Pirok, B. W. J.; Breuer, P.; Hoppe, S. J. M.; et al. Size-Exclusion Chromatography Using Core-shell Particles. J. Chromatogr. A 2017, 1486, 96–102. DOI: 10.1016/j.chroma.2016.12.015
