- September 2025

- Volume 2

- Issue 7

How to Meaningfully Describe and Display Analytical Data? A Dive Into Descriptive Statistics

Key Takeaways

- Data quality is critical for successful data processing, with robust statistics essential for handling outliers and ensuring accurate representation.

- The standard deviation is sensitive to outliers, making it non-robust, while the median absolute deviation (MAD) offers a more stable alternative.

Explore the importance of robust statistics like median and MAD in data analysis, ensuring accurate insights despite outliers and variability.

A famous statement in information sciences is “garbage in, garbage out,” which serves as a reminder that the success of any data processing strategy hinges on data quality. There is little point in constructing a calibration line for data that show an unclear relationship between concentration and detector response, or in performing robust peak detection or resolution enhancement on noisy signals with high degrees of coelution, and no point at all in feeding poor data into a machine learning model. One critical aspect of data quality is noise, which we often inspect using measures of variance. Typically, we express this as the standard deviation, which quantifies the precision of a measurement. However, before adopting more sophisticated data processing or machine learning strategies, we must first understand how to characterize variance reliably. In this installment, we revisit the robustness of the standard deviation, using both statistical concepts and visual tools.

In the previous installment of "Data Analysis Solutions," we considered the effect of replicates on confidence intervals (2). Repeated measurements yield descriptive statistics, such as measures of central tendency. The arithmetic mean (average) is the most common, but the median, which is the middle value, is often a better choice when robustness is needed.

To illustrate, consider the data set: 2.7, 3.0, 3.3, 3.4, 3.4, 3.7, and 10.2. Here, the mean is x̄ = 4.2, while the median is x̃ = 3.4. The median is robust, unaffected by the outlier 10.2, while the mean is heavily distorted, no longer representing the bulk of the data. Robust statistics, such as the median, help guard against misleading conclusions caused by outliers.
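The arithmetic above can be checked with a minimal sketch that uses Python's standard-library `statistics` module. The language choice is ours, and the data are the seven values from the text. Removing 10.2 shows which of the two statistics the outlier actually moved.

```python
from statistics import mean, median

# Seven-value example from the text; 10.2 is the suspected outlier
data = [2.7, 3.0, 3.3, 3.4, 3.4, 3.7, 10.2]

x_mean = mean(data)      # arithmetic mean: pulled upward by 10.2
x_median = median(data)  # middle value of the sorted data: ignores the tail

print(f"mean   = {x_mean:.1f}")    # mean   = 4.2
print(f"median = {x_median:.1f}")  # median = 3.4

# Drop the outlier to see which statistic it actually moved
clean = data[:-1]
print(f"mean without 10.2   = {mean(clean):.2f}")    # mean without 10.2   = 3.25
print(f"median without 10.2 = {median(clean):.2f}")  # median without 10.2 = 3.35
```

The mean shifts by about 1.0 when the single outlier is removed, while the median moves by only 0.05.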

Robustness is especially important when working with real data, where errors, outliers, or unexpected variability are common. When preparing data for calibration models, machine learning pipelines, or exploratory analysis, using robust statistics helps ensure we are characterizing the true behavior of our data, rather than being misled by a handful of unusual observations.

Measuring Variance: Standard Deviation and Its Limits

Just as we want to know the center of the data, we also want to understand how spread out the data are. This brings us to variance measurements, which quantify variability. The most familiar is the standard deviation (σ for a population, s for a sample), which describes how tightly values cluster around the mean.
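For reference, the textbook definition of the sample standard deviation s, for n replicates x_i with arithmetic mean x̄, is given below. The population standard deviation σ has the same form but uses the population mean μ and divides by N rather than n − 1.

$$s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}$$

Every term in the sum is measured from x̄, so anything that distorts the mean also distorts s.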

The standard deviation works well for normally distributed data, where values are spread symmetrically around the mean. However, like the mean, the standard deviation is sensitive to outliers; a single extreme value can inflate it dramatically. As a result, it too is classified as non-robust.
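To put a number on "dramatically," the sketch below computes the sample standard deviation of the same seven values with and without the single outlier. It is a minimal illustration using Python's standard library, not a prescribed workflow.

```python
from statistics import stdev

# Seven-value example from the text; 10.2 is the suspected outlier
data = [2.7, 3.0, 3.3, 3.4, 3.4, 3.7, 10.2]
clean = data[:-1]  # the same values with 10.2 removed

# statistics.stdev is the sample standard deviation (n - 1 in the denominator)
s_all = stdev(data)
s_clean = stdev(clean)

print(f"s with 10.2    = {s_all:.2f}")             # s with 10.2    = 2.65
print(f"s without 10.2 = {s_clean:.2f}")           # s without 10.2 = 0.35
print(f"inflation      = {s_all / s_clean:.1f}x")  # inflation      = 7.5x
```

One point out of seven inflates s roughly 7.5-fold, even though the other six values are tightly clustered.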

From Numbers to Visuals

Summary statistics tell part of the story, but visual tools provide immediate insight into data structure, outliers, and spread. Students in my chemometrics classes who struggle to develop a data analysis strategy will therefore always hear my recommendation to plot the data first. This was also the philosophy of Florence Nightingale (1820–1910), a pioneering nurse and statistician who famously used polar area diagrams, a variant of the pie chart, to convey seasonal variations in patient mortality during the Crimean War.

Let us imagine that we took the previous installment very seriously and decided to conduct 100 replicates of a measurement. Table I shows the 100 measurements. The table also groups them into sets of ten, each with its own mean and standard deviation, but we will ignore those for now.

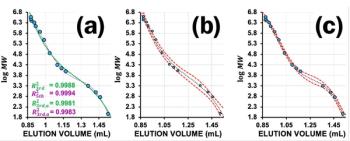

The data are visualized in Figure 1. The histogram in Figure 1a gives us an initial sense of the distribution of these 100 measurements. The box plots in Figure 1b then offer a more detailed view, showing not only the median and interquartile range (IQR), but also the spread of typical values and the presence of any outliers.
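The statistics behind a box plot can be sketched in a few lines. This sketch assumes the common Tukey convention, in which whiskers extend to the most extreme points within 1.5 × IQR of the quartiles and anything beyond is flagged. It also assumes the "inclusive" quartile method of Python's `statistics.quantiles`. Plotting software differs on both choices, so this is not necessarily how Figure 1b was drawn. Because Table I is only available as an image, the example reuses the seven-value data set from earlier. The function name `box_plot_summary` is hypothetical.

```python
from statistics import quantiles

def box_plot_summary(values, k=1.5):
    """Box-plot statistics using Tukey's convention (fences at k * IQR beyond the quartiles)."""
    x = sorted(values)
    # Quartiles by linear interpolation; other software may use a different method
    q1, q2, q3 = quantiles(x, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence, upper_fence = q1 - k * iqr, q3 + k * iqr
    inside = [v for v in x if lower_fence <= v <= upper_fence]
    return {
        "median": q2,
        "q1": q1,
        "q3": q3,
        "iqr": iqr,
        # Whiskers end at the most extreme values still inside the fences
        "whiskers": (inside[0], inside[-1]),
        # Anything beyond the fences is drawn as an individual point
        "outliers": [v for v in x if v < lower_fence or v > upper_fence],
    }

summary = box_plot_summary([2.7, 3.0, 3.3, 3.4, 3.4, 3.7, 10.2])
print(f"Q1 = {summary['q1']:.2f}, median = {summary['median']:.2f}, Q3 = {summary['q3']:.2f}")
print(f"IQR = {summary['iqr']:.2f}, whiskers = {summary['whiskers']}, outliers = {summary['outliers']}")
```

Because the quartiles and IQR are built from ranks, the value 10.2 does not widen the box. It simply appears as a separate point beyond the upper fence.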

As discussed, the standard deviation (shown in Table I) is the most familiar way to quantify the spread of data. However, it is sensitive to outliers, which can inflate the calculated variance, sometimes dramatically. This is because the standard deviation is computed from deviations from the mean, which is itself non-robust, and because those deviations are squared, so a single distant value contributes disproportionately to the total.

Introducing Median Absolute Deviation—A More Robust Alternative

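A more robust alternative for quantifying spread is the median absolute deviation (MAD). Rather than measuring deviation from the mean, MAD measures the typical distance of each value from the median, making it far less sensitive to outliers. The formula for MAD is:

$$\mathrm{MAD} = \operatorname{median}_{i}\left(\left|x_i - \tilde{x}\right|\right)$$

where x̃ is the median of the measurements x_i.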

Since the median is resistant to outliers, so is MAD. It provides a stable measure of spread, even when a handful of rogue measurements appear. If we calculate both the standard deviation and MAD for sets of 100 replicates, we would likely see the standard deviation fluctuate more if outliers were present, while the MAD would remain relatively stable.
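The MAD formula translates almost directly into code. The sketch below applies it to the seven-value example and compares the result with the standard deviation. The function name `mad` and its optional `scale` argument are illustrative additions. A widely used convention multiplies MAD by about 1.4826 so that, for normally distributed data, it estimates the same quantity as σ.

```python
from statistics import median, stdev

def mad(values, scale=1.0):
    """Median absolute deviation: median of |x_i - median(x)|, times an optional scale factor."""
    center = median(values)
    return scale * median([abs(v - center) for v in values])

data = [2.7, 3.0, 3.3, 3.4, 3.4, 3.7, 10.2]
clean = data[:-1]  # the same values with the outlier 10.2 removed

for label, x in (("with 10.2", data), ("without 10.2", clean)):
    print(f"{label}: s = {stdev(x):.2f}, MAD = {mad(x):.2f}, scaled MAD = {mad(x, 1.4826):.2f}")
# with 10.2: s = 2.65, MAD = 0.30, scaled MAD = 0.44
# without 10.2: s = 0.35, MAD = 0.20, scaled MAD = 0.30
```

Removing the outlier changes s more than sevenfold but shifts MAD only from 0.30 to 0.20. Part of that shift comes from the median itself moving when a point is dropped from such a small set.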

Why is This Relevant for Machine Learning and Signal Processing in Chromatography?

This distinction between robust and non-robust statistics is not just an academic curiosity. In chromatographic signal processing, measures of central tendency and variance are often used to normalize or scale data, filter background, detect peaks, and feed data into machine learning tools. If these preprocessing steps rely on non-robust statistics, the entire data pipeline can become skewed by a small number of unusual points. In a future installment, we will see that many outlier detection tools rely on similar assumptions, and that their use is not without risk.
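As an illustration of how this choice matters in preprocessing, the sketch below standardizes (autoscales) the seven-value example in two ways. The first is the classic z-score, which uses the mean and standard deviation. The second is a robust variant that uses the median and the MAD scaled by 1.4826. The function names `classic_z` and `robust_z` are hypothetical and do not correspond to a specific library's preprocessing routine.

```python
from statistics import mean, median, stdev

MAD_TO_SIGMA = 1.4826  # consistency constant for normally distributed data

def classic_z(values):
    """Standardize with mean and sample standard deviation (non-robust)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def robust_z(values):
    """Standardize with median and scaled MAD (robust); fails if MAD is zero."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    return [(v - med) / (MAD_TO_SIGMA * mad) for v in values]

data = [2.7, 3.0, 3.3, 3.4, 3.4, 3.7, 10.2]
print(f"classic z of 10.2: {classic_z(data)[-1]:.2f}")  # classic z of 10.2: 2.25
print(f"robust z of 10.2:  {robust_z(data)[-1]:.1f}")   # robust z of 10.2:  15.3
```

The outlier inflates s so much that its own classic z-score is only about 2.25. With n = 7 points, no classic z-score can exceed (n − 1)/√n ≈ 2.27. The robust version places the same point about 15 scaled MADs from the median. Note that `robust_z` divides by zero when more than half of the values are identical (MAD = 0), a case a real pipeline would need to handle.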

Standard Deviation for Your Sample

One final observation can be drawn from Table I. The right-most column shows the standard deviation for each row, calculated from 10 repeated measurements. Despite the identical number of replicates, the standard deviations vary considerably. This highlights a key point from the previous installment: fewer replicates lead to greater uncertainty about how well the sample represents the population (2). It also explains why a standard deviation based on just two or three points is rarely meaningful.
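A quick simulation illustrates the point. The sketch assumes synthetic, normally distributed replicates with a true σ of 1. These are not the Table I data. It draws many sets of n replicates and reports how much the sample standard deviation itself varies from set to set. The function name `spread_of_sample_sd` and the fixed seed are illustrative choices.

```python
import random
from statistics import stdev

def spread_of_sample_sd(n, n_sets=2000, sigma=1.0, seed=1):
    """Draw n_sets groups of n normal replicates; return (min, max, SD) of their sample SDs."""
    rng = random.Random(seed)  # seeded only so the run is reproducible
    sds = [stdev([rng.gauss(0.0, sigma) for _ in range(n)]) for _ in range(n_sets)]
    return min(sds), max(sds), stdev(sds)

for n in (3, 10, 100):
    lo, hi, sd_of_sd = spread_of_sample_sd(n)
    print(f"n = {n:3d}: s ranges {lo:.2f} to {hi:.2f}, SD of s = {sd_of_sd:.2f}")
```

For normal data, the scatter of s around σ is roughly σ/√(2(n − 1)). With n = 3, individual estimates can land far from the true value of 1. With 10 replicates they tighten, and with 100 they tighten further.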

References

1. Pirok, B. W. J.; Schoenmakers, P. J. Analytical Separation Science; Royal Society of Chemistry, 2025.

2. Pirok, B. W. J. How Many Repetitions Do I Need? Caught Between Sound Statistics and Chromatographic Practice. LCGC International 2025, 2 (3), 28–29. DOI: 10.56530/lcgc.int.ck5885t8
