The Answer’s AI. What’s the Question?

Key Takeaways

- AI/ML can improve HPLC peak integration by automating processes, but requires high-quality chromatographic data for effectiveness.

- Regulatory guidance on AI/ML in biopharma is inconsistent, with FDA and EMA offering differing frameworks and definitions.


Biopharmaceutical analysis involves the development, validation, transfer, and operation of high performance liquid chromatography (HPLC) methods for the separation of complex mixtures with broad peaks. Does artificial intelligence/machine learning (AI/ML) help to enhance chromatographic peak integration? What do regulators say? Are you being seduced by technology?

Artificial intelligence/machine learning (AI/ML) is the hot topic throughout the biopharmaceutical and pharmaceutical industries as a way to aid or replace human input. The hype surrounding AI/ML can lead to seduction by technology, hence the article title. Use of AI within a regulated organization should follow AI governance policies and procedures within the Pharmaceutical Quality System (PQS). Please see Mintanciyan et al. (1).

AI refers to a machine-based system, and ML refers to a set of techniques that can be used to train AI algorithms (2), so ML should not be considered outside the scope of AI.

Biopharma HPLC Analysis and AI/ML

High performance liquid chromatography (HPLC) separation of macromolecules uses reversed phase, affinity, size exclusion, or ion-exchange columns with a variety of detectors for identity, purity, and stability testing. Typically, eluting peaks are broad, which may result in complex chromatograms with partial separation that require manual integration and attract increased regulatory scrutiny (3–5). Manual integration is slow and subjective, and can delay product release. Automated integration is the preferred option, and we will look at how this could be enhanced by ML.

ICH Q2(R2) (6) and Q14 (7) on analytical procedure life cycle were reviewed by Burgess and McDowall (8), and Figure 1 is based on the more integrated approach in USP <1220> (9) to which we have added areas of potential AI/ML implementation.

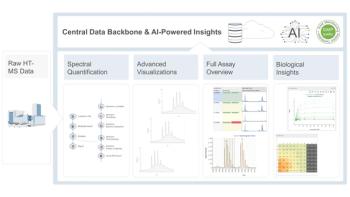

Using ML models within chromatography data systems (CDS) can be considered from both supplier and user levels. In this article, our aim is to provide a practical approach to see where ML can improve peak integration within a CDS. This article is conceptual and does not provide specific examples. A more detailed overview of AI/ML in chromatography is provided in reference 10. The prime requirement for AI is high-quality source chromatographic data; no amount of AI input can resurrect poor chromatography.

What about the regulators? Is AI/ML just another computerized system or are additional controls required? Is AI under control or is it the Wild West? The impact of draft Annex 22 regulation (11) and FDA draft guidance on AI in regulatory decision-making (12) will be reviewed. For validation of AI/ML, the book by Lopez (13), the BioPhorum publication (14), and GAMP 5 (15) are recommended.

An experienced chromatographer should review all outputs from a CDS regardless of whether AI is used; this allows for manual intervention in which integration parameters can be modified while baselines remain unchanged (3).

Method Development

Manual method development is a slow and iterative process if a robust and reliable analytical procedure is required; even pharmacopeial “methods” are merely starting points for the process. As shown in Figure 1, USP <1220> starts with defining the analytical target profile (ATP) and developing the procedure to meet it. The key to more automated peak integration is good method development to separate peaks, identify critical control parameters, and set integration parameters for procedure validation and operational deployment. Areas where peak integration could be improved by ML in stage 1 are:

- Baseline detection and correction;

- Peak detection in complex profiles;

- Automated peak deconvolution;

- Adaptive integration rules;

- Real-time feedback and anomaly detection.

These potential improvements would lead to consistency between analysts and laboratories that can be verified during method validation and method transfer, respectively.
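To make the first two items in the list above concrete, the following is a minimal sketch of conventional, rule-based baseline correction and peak integration on a synthetic trace. It is not an ML model and not a CDS algorithm; it only illustrates the kind of output (baseline, peak limits, areas) that an ML approach would be expected to reproduce or improve. The peak shapes, the linear baseline assumption, the threshold, and all function names are assumptions made for illustration.

```python
import math


def gaussian(t, center, width, height):
    """Gaussian peak shape used only to build the synthetic trace."""
    return height * math.exp(-0.5 * ((t - center) / width) ** 2)


def simulate_trace(n_points=601, dt=0.01):
    """Synthetic trace: two broad peaks on a linearly drifting baseline."""
    times = [i * dt for i in range(n_points)]
    signal = [
        0.5 + 0.1 * t                      # assumed linear baseline drift
        + gaussian(t, 2.0, 0.15, 10.0)     # assumed peak 1
        + gaussian(t, 4.0, 0.20, 6.0)      # assumed peak 2
        for t in times
    ]
    return times, signal


def linear_baseline(times, signal, n_edge=20):
    """Straight line through the mean of the first and last n_edge points."""
    t0 = sum(times[:n_edge]) / n_edge
    s0 = sum(signal[:n_edge]) / n_edge
    t1 = sum(times[-n_edge:]) / n_edge
    s1 = sum(signal[-n_edge:]) / n_edge
    slope = (s1 - s0) / (t1 - t0)
    return [s0 + slope * (t - t0) for t in times]


def integrate_peaks(times, corrected, threshold=0.05):
    """Trapezoidal area of each contiguous region above the threshold."""
    peaks = []
    start = None
    # The -inf sentinel closes a peak that is still open at the end of the run.
    for i, y in enumerate(corrected + [float("-inf")]):
        if y > threshold and start is None:
            start = i
        elif y <= threshold and start is not None:
            area = sum(
                0.5 * (corrected[j] + corrected[j + 1]) * (times[j + 1] - times[j])
                for j in range(start, i - 1)
            )
            apex = max(range(start, i), key=lambda j: corrected[j])
            peaks.append({"apex_time": times[apex], "area": area})
            start = None
    return peaks


times, signal = simulate_trace()
baseline = linear_baseline(times, signal)
corrected = [s - b for s, b in zip(signal, baseline)]
for peak in integrate_peaks(times, corrected):
    print(peak)
```

A fixed threshold and a straight-line baseline work here only because the synthetic peaks are fully resolved. Real biopharmaceutical chromatograms with partially separated broad peaks are exactly where such fixed rules break down and where adaptive approaches might help.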

Experimental design has been available for chromatographic separations since the 1980s, with several commercial applications available that can automate the process. Improved chemometric approaches to automated method development were published by Bos et al. (16), and Marchetto et al. (17) have developed an in-silico ML system for predicting HPLC method parameters or optimizing existing LC methods. A neural network ML model has been developed by Satwekar et al. (18) to predict analytical variability for peak integration. It remains to be seen whether these approaches will be integrated within a commercial CDS.

As Figure 1 indicates, the outputs from method development must be the identification of critical control parameters, an understanding of their impact on the method, and an ML algorithm trained with automatic peak integration parameters. A potential monitoring metric for routine use should be defined at this stage, for example, automatic peak integration > 90%.
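As an illustration of such a metric, the sketch below computes the percentage of peaks in a run that were integrated automatically and compares it with the 90% example target. The `RunSummary` record and its field names are hypothetical; a real CDS would expose these counts in its own way.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical per-run counts exported from a CDS."""
    run_id: str
    peaks_total: int
    peaks_manual: int  # peaks that needed manual reintegration


def automatic_integration_percent(run):
    """Percentage of peaks in the run that were integrated automatically."""
    if run.peaks_total <= 0:
        raise ValueError("run has no integrated peaks")
    return 100.0 * (run.peaks_total - run.peaks_manual) / run.peaks_total


def meets_target(run, target_percent=90.0):
    """True when the run is strictly above the target (the > 90% example)."""
    return automatic_integration_percent(run) > target_percent


for run in (RunSummary("R1", 40, 2), RunSummary("R2", 40, 4)):
    print(run.run_id, automatic_integration_percent(run), meets_target(run))
```

Whether the metric is counted per peak, per injection, or per run is a design decision that should be fixed in the procedure so that values from validation and routine use remain comparable.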

Procedure Performance Qualification and Verification

Peak integration parameters will be verified within stage 2 (method validation). This will permit the first evaluation of the success of method development for each approved plan, define automatic peak integration metrics, and also demonstrate how AI/ML is helping to improve integration. The first peak integration metrics can be generated here as a baseline for routine use. Integration parameters should be fixed at this point to ensure faster processing post run.

When the method is used routinely (stage 3), the metrics generated by the CDS should indicate how each analytical run meets the objective to reduce manual peak integration. Metrics should be monitored routinely and plotted. If manual integration starts to increase, consideration should be given to updating the method and the AI/ML integration, as shown in Figure 1.
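One simple way to spot such an increase is to trend the percentage of manually integrated peaks with a rolling mean and flag the first run where it crosses an action limit. The sketch below assumes a five-run window and a 10% action limit; both values and the function names are illustrative, not taken from any regulation or CDS.

```python
def rolling_mean(values, window):
    """Mean of each consecutive block of `window` values."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def first_alert(manual_percent_by_run, window=5, action_limit=10.0):
    """Index of the first run whose rolling mean exceeds the limit, else None."""
    means = rolling_mean(manual_percent_by_run, window)
    for offset, mean in enumerate(means):
        if mean > action_limit:
            return offset + window - 1
    return None


# Hypothetical percentage of manually integrated peaks in ten successive runs.
history = [4, 5, 3, 6, 5, 8, 12, 14, 15, 16]
print(first_alert(history))
```

A rolling mean smooths out a single difficult run, so the alert reflects a sustained trend rather than one outlier. A laboratory could equally use a control chart with limits derived from the stage 2 baseline metrics.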

This is an ideal situation that could be developed in-house but is more likely to come from a CDS supplier. What is the impact and risk of using AI/ML in a regulated biopharmaceutical laboratory?

What Do the Regulators Say?

Regulations should provide clarity on how to use AI/ML. The EMA has published draft Annex 22 regulation (11) and a reflection paper on AI (19), and the FDA has published a draft guidance on AI in regulatory decision-making (12).

- Annex 22 (A22) (11) is for GMP-critical AI/ML computerized systems that can predict or classify data. It also considers static AI/ML with deterministic output, where models are trained by data rather than being programmed. However, even a frozen (static) AI does not provide a deterministic output, so a definition of deterministic AI should be provided in this document. Section 1 discourages the use of generative AI (gen-AI), large language models (LLM), and models with a probabilistic output. Section 5.6 on test data repeats this point, stating …by means of generative AI…any use…should be fully justified. The glossary provides a definition of deep learning but omits gen-AI and LLM.

- FDA draft Considerations for the Use of AI to Support Regulatory Decision-Making for Drug and Biological Products (12) (FDA-AI) discusses a context of use (COU) and credibility assessment framework for an AI model to be used in the drug product life cycle from nonclinical to post-marketing (footnote 10). In contrast to A22, FDA-AI appears to allow any AI model but suggests liaison with the Agency (Figure 2). Unsupervised learning methodology is considered in line 379, which raises the question of whether dynamic ML is within the scope of the FDA guidance (15). Along with the definitions adapted from other AI documents (20–22), the Agency also hosts an AI glossary (23).

- EMA Reflection paper on the use of AI in the medicinal product life cycle (19) (EMA-AI) outlines AI/ML use in the life cycle of medicinal products: for example, drug discovery and manufacturing (including …in-process quality control and batch release). This document is focused on all models developed through the process of ML… (19).

The AI definitions in these documents describe the output of a trained model as predictions, content recommendations, or decisions. The vagueness of these definitions does not make clear whether gen-AI and LLMs fall within or outside the scope of each document. AI governance is not mentioned by either FDA-AI or A22, which is an omission in both. Following the proposed revision of EU GMP Chapter 4 on Documentation (11), we can infer that A22 should be tied to this draft; however, the more recent proposed update of Chapter 1 on PQS (24) misses the opportunity to mention AI/ML.

Our discussion will compare A22 and FDA-AI and will expand the points to include EMA-AI and other relevant sources, where appropriate. AI ethical and FAIR (findable, accessible, interoperable, and reusable) data principles are out of scope of this article.

Regulations and Guidance Moving in Different Directions?

A22 contains one clause on risk management that provides a traditional description. Particular risks of AI systems, such as bias mitigation, transparency, auditability, and traceability, must be considered (13,14). From the FDA standpoint, assessing model risk consists of two elements, model influence and decision consequence, and this assessment is embedded into a credibility assessment framework.
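The combination of the two FDA elements can be pictured as a simple risk matrix. The sketch below is only an illustration: the three-level scale, the multiplicative score, and the cut-offs are assumptions and are not taken from FDA-AI, which does not prescribe a scoring scheme.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def model_risk(model_influence, decision_consequence):
    """Combine the two elements into one level (illustrative cut-offs only)."""
    score = model_influence * decision_consequence  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return Level.HIGH
    if score >= 3:
        return Level.MEDIUM
    return Level.LOW


# Hypothetical example: an integration model whose output is always reviewed
# by a chromatographer (lower influence) but feeds batch release (high consequence).
print(model_risk(Level.LOW, Level.HIGH).name)
```

In this picture, keeping an experienced chromatographer in the review loop lowers model influence, which is one way human oversight can reduce the assessed model risk even when the decision consequence is high.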

As shown in Figure 2, the FDA-AI framework comprises a 7-step process that assesses a model output by collecting credibility evidence (12).

Similarly, EMA-AI (2.5) (19) and GAMP 5 SE (31.3.1) (15) follow a life cycle for AI/ML and the latter states …many aspects of the traditional computerized-system life cycle and compliance/validation approach are fully applicable to AI/ML (15).

Although there is some overlap between the content of A22 and the above sources, A22 does not present a framework; it covers the intended use and testing principles. One of the important missing steps in A22 is the need for regulatory engagement, as discussed earlier. EMA-AI has a dedicated section (2.4) covering regulatory interactions, which depend on the impact of the AI/ML on regulatory decisions (19).

Intended Use (IU) and Context of Use (COU)

FDA-AI uses the terms fit for use and fit for purpose interchangeably (footnote 17), which is applicable to both model development (lines 88–89) and testing data (line 412). From the FDA standpoint, this means that data should be:

- Relevant, for example, sufficient numbers of data are required;

- Reliable, for example, data accuracy, completeness, and traceability.

The concept of IU is connected to COU, which the FDA details in steps 1 and 2 (12). To define COU in the role and scope of AI (step 2), you need to first describe the question, decision, or concern that you want to address using the model (step 1). GAMP 5 SE in sections 31.3.1 and 31.3.2 provides guidance in specifying the business need or opportunity within the concept phase (15).

In contrast, COU is not discussed by A22. Section 3 describes IU and section 5 discusses whether the test data set is of sufficient size. A definition of IU needs to be added to the A22 glossary.

Clauses 3.3 and 10.5 of A22 (human in the loop [HITL]) state that if model output impacts operator decision, the IU needs to define the level of human interaction (11). HITL is also discussed by FDA-AI under step 2, which states …COU should also include a statement on whether other information …will be used in conjunction with the model output to answer the question of interest (12).

Data Sets

Table I presents an overview of the data sets that are used for ML models; a tick symbol merely indicates the concept inclusion, as descriptions vary across the sources. For example, there is consistency in utilizing test data that is conveyed by different phrases: before…deployment (19), after training (12), and final ML model (11).

What’s Missing?

FDA-AI introduces the term development data sets, which contain training and tuning data, but the latter is omitted in A22.

Caution should be exercised when discussing validation, as it can be interpreted differently. FDA does not use validation terminology and states …the AI and ML communities sometimes use the term validation to refer to the tuning data… (footnote 29) (12). Section 2.5.2 of the EMA-AI reiterates the above point (19). However, to stay consistent with Annex 11 (11) and Annex 15 (25) regulations, precise definitions and comprehensive requirements need to be agreed upon. The book by Lopez provides a description of the ML life cycle, comprehensive definitions, extensive references, and a practical approach to ML validation (13). A BioPhorum publication combines industrial and data scientist approaches and gives an overarching explanation encompassing computerized system validation with an AI sub-system (14).

Key concepts of the comparison of FDA-AI and A22 are shown in Figure 2 and are discussed here:

- Any ML model must reliably, accurately, and traceably produce outputs that will comply with the relevant predicate rule (13). The strongest focus of A22 is on verification and validation data, but key questions on how to train the models and how to secure their meaningfulness are missing.

- The principles of ALCOA++ (attributable, legible, contemporaneous, original, accurate, complete, consistent, enduring, available, and traceable) are embedded indirectly in A22. However, neither A22 nor FDA-AI adequately discusses the criteria for high-quality data sets that avoid problems such as reduced accuracy, overfitting to noise, and bias.

- Post-deployment data and model drift, mentioned by FDA-AI (12), and A22 section 5.5 (11) on test data exclusion are good examples that highlight the requirement for good-quality data to train models.

- Independence and splitting of the test data set from training data are discussed in A22 section 6 and FDA-AI step 4. A22 also considers the independence of the validation data set from other data (11).

- More detail in both FDA-AI and A22 is required to incorporate the use of cross-validation or bootstrapping (generating separate training and validation sets from the training or validation set [20]).

- Re-use of test data is inconsistent between documents:

  - A22 section 6 allows the reuse of test data but this needs to be documented with the justification (11).

  - GAMP 5 SE (31.3.1) … understanding needs to be known as to which data can be used multiple times... (15).

  - EMA-AI (2.5.2) … current test data set cannot be used if test performance is unsatisfactory (19).

- Availability of an assessment report during an inspection is considered by FDA-AI (step 6), but A22 does not mention this. The FDA approach makes life easier for the inspectors.

- Definitions are not complete and consistent between A22 and FDA-AI. In addition, deviation within an AI context requires definition. Definitions of key ML components, such as parameters and hyperparameters, are missing in A22; for more detail, see Lopez (13).
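The data set points in the list above (an independent test set, cross-validation, and bootstrapping) can be sketched in a few lines. This is a minimal illustration under stated assumptions: records are plain dictionaries, independence is enforced by holding out whole groups (here a hypothetical `batch` key) so that no batch contributes to both sets, and the function names are invented for this example. It follows FDA-AI terminology in calling the resampled subsets training and tuning data.

```python
import random


def split_by_group(records, group_key, test_fraction=0.2, seed=0):
    """Hold out whole groups so no group appears in both development and test data."""
    groups = sorted({record[group_key] for record in records})
    rng = random.Random(seed)
    rng.shuffle(groups)
    n_test = max(1, round(len(groups) * test_fraction))
    test_groups = set(groups[:n_test])
    development = [r for r in records if r[group_key] not in test_groups]
    test = [r for r in records if r[group_key] in test_groups]
    return development, test


def k_fold_indices(n_items, k):
    """Yield (training, tuning) index lists; each item is used for tuning once."""
    indices = list(range(n_items))
    for fold in range(k):
        tuning = indices[fold::k]
        tuning_set = set(tuning)
        training = [i for i in indices if i not in tuning_set]
        yield training, tuning


def bootstrap_indices(n_items, seed=0):
    """Sample with replacement; items never drawn form the tuning set."""
    rng = random.Random(seed)
    training = [rng.randrange(n_items) for _ in range(n_items)]
    drawn = set(training)
    tuning = [i for i in range(n_items) if i not in drawn]
    return training, tuning


# Hypothetical data: 10 batches with 3 injections each.
records = [{"batch": f"B{b}", "injection": i} for b in range(10) for i in range(3)]
development, test = split_by_group(records, "batch")
print(len(development), len(test))
```

Cross-validation and bootstrapping are applied only within the development data; the held-out test set is not touched by either, which is what keeps it independent of training.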

Summary

We have discussed the potential of AI/ML implementation to improve CDS peak integration throughout the analytical procedure life cycle. We have compared the recent draft regulatory documents A22 and FDA-AI and observed that they are not harmonized, which causes problems when implementing AI/ML. The fundamental disconnects are incomplete and inconsistent AI/ML nomenclature and definitions, which should be the primary focus of regulators; in addition, FDA recommends Agency interaction, whereas EU GMP does not. With the current gaps within the regulations, we believe using AI-based peak integration is still an open question.

Acknowledgments

We thank, in alphabetical order, Dave Abramowitz, Bob Iser, Orlando Lopez, Yves Samson, and Daniel Wolf for their input and review comments during the writing of this article.

References

(1) Mintanciyan, A.; Budihandjo, R.; English, J.; et al. Artificial Intelligence Governance in GXP Environments. Pharm. Tech. 2024, 44 (4), 62–67.

(2) Executive Order 14110 Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, 2023.

(3) McDowall, R. D. Where Can I Draw The Line? LCGC Europe 2015, 28 (6), 336–342.

(4) Longden, H.; McDowall, R. D. Can We Continue to Draw the Line? LCGC Europe 2019, 21 (12), 641–651.

(5) McDowall, R. D. Ingenious Ways to Manipulate Peak Integration? LCGC International 2024, 1 (2), 20–26.

(6) ICH, Q2(R2) Validation of Analytical Procedures, Step 4 Final. International Council on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Geneva, 2023.

(7) ICH, Q14 Analytical Procedure Development. Step 4 final. International Council on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Geneva, 2023.

(8) Burgess, C.; McDowall, R. D. Quo Vadis Analytical Procedure Development and Validation? Spectroscopy 2022, 37 (9), 8–14.

(9) USP, USP General Chapter <1220>, “Analytical Procedure Lifecycle,” United States Pharmacopeial Convention Inc: Rockville, 2022.

(10) Chasse, J. Artificial Intelligence in Chromatography: Advancing Method Development and Data Interpretation. LCGC International, 2025.

(11) EMA, Stakeholders’ Consultation on EudraLex Volume 4 - Good Manufacturing Practice Guidelines: Chapter 4, Annex 11 and New Annex 22. (European Medicines Agency, 2025).

(12) FDA, Draft Guidance for Industry Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products (FDA, Silver Spring, MD, 2025).

(13) Lopez, O. Machine Learning Lifecycle and Its Validation; Author Publications, 2025.

(14) AI Validation: Implementing AI Systems in Regulated Pharma Environments. BioPhorum: London, 2025.

(15) GAMP, GAMP 5: A Risk-Based Approach to Compliant GxP Computerized Systems (Second Edition); International Society of Pharmaceutical Engineering, 2022.

(16) Bos, T. S.; Boelrijk, J.; Molenaar, S. R. A.; et al. Chemometric Strategies for Fully Automated Interpretive Method Development in Liquid Chromatography. Anal. Chem. 2022, 94 (46), 16060–16068. DOI:

(17) Marchetto, A.; Tirapelle, M.; Mazzei, L.; Sorensen, E.; Besenhard, M. O. In Silico High-Performance Liquid Chromatography Method Development via Machine Learning. Anal. Chem. 2025, 97 (13), 6991–7001. DOI:

(18) Satwekar, A.; Panda, A.; Nandula, P.; et al. Digital by Design Approach to Develop a Universal Deep Learning AI Architecture for Automatic Chromatographic Peak Integration. Biotechnol. Bioeng. 2023, 120, 1822–1843. DOI:

(19) EMA, Reflection Paper on the Use of Artificial Intelligence (AI) in the Medicinal Product Lifecycle European Medicines Agency, 2024.

(20) ISO, ISO/IEC 22989:2022 Information technology — Artificial intelligence — Artificial intelligence concepts and terminology (International Standards Organisation, 2022).

(21) ISO, ISO/IEC 23053:2022 Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML) (International Standards Organisation, 2022).

(22) IMDRF, IMDRF Machine Learning-enabled Medical Devices: Key Terms and Definitions (International Medical Device Regulators Forum: 2022).

(23) FDA, FDA Digital Health and Artificial Intelligence Glossary – Educational Resource.

(24) EMA, Stakeholders’ Consultation on EudraLex Volume 4 - Good Manufacturing Practice Guidelines: Chapter 1 (European Medicines Agency, 2025).

(25) EudraLex, Volume 4 Good Manufacturing Practice (GMP) Guidelines, Annex 15 Qualification and Validation (European Commission, 2015).
