- LCGC Europe-12-01-2009

- Volume 22

- Issue 11

Response Surface Designs (Part 2) — Data Analysis and Multiresponse Optimization

Part two of the column on response surface designs looks at data analysis and various approaches to simultaneously optimize multiple responses

As discussed in Part 1, in the May issue of LC GC Europe, during method optimization, the factors that influence the considered response(s) most — which are often found from a screening step — are often subsequently examined using response surface designs to determine the optimal experimental conditions. The latter is done by modelling the response(s) as a function of the investigated factors. In Part 2 the data analysis of the response surface design results and various approaches to simultaneously optimize multiple responses are discussed.

Method development is often split into a screening and an optimization step.1,2 During the screening, so-called screening designs are applied.3,4 During the optimization, response surface designs are often applied to determine the optimal experimental conditions.1,2

In the previous column (Response Surface Designs, Part 1),2 detailed attention was given to the types and properties of frequently applied response surface designs. In this column, the analysis of the response surface design results and some approaches to simultaneously optimize multiple responses will be discussed.

Data Analysis

The response surface design results are analysed by building, interpreting and validating a model describing the relationship between the response(s) and the considered factors. Usually, a second-order polynomial model is built (see Equations 1 and 2). In this section, the model building, the determination of the optimum for one response and the model validation are discussed.

Model building. Because only two or three important factors are usually optimized in a response surface design, only these models are shown. Nevertheless, for more factors, the models are similar. The models for two (x1 and x2) and three factors (x1, x2 and x3) are given by Equations 1 and 2, respectively.

In Equations 1–2, y is the response; β0 the intercept; β1, β2 and β3 the main factor coefficients; β12, β13, β23 and β123 the two- and three-factor interaction coefficients; and β11, β22 and β33 the quadratic coefficients.5 Usually, the interaction effect terms are restricted to two-factor interactions, as is shown below. This is because higher-order interactions are considered negligible.

From the response surface design results, these β-coefficients are estimated as b-coefficients. For two (Equation 3) and three (Equation 4) factors, this results in the following (calculated) models.

In Equations 3–4, ŷ is the predicted response from the model; b0 the estimated intercept; bi, bij and bii, the calculated main factor, two-factor interaction and quadratic coefficients; and ε the residual.5

The b-coefficients are estimated using regression techniques, relating the N × 1 response vector y, to the N × t model matrix X,5 with N being the number of design experiments and t the number of terms included in the calculated model. For example, in Equation 3, t equals six, because one intercept, two main effects, one interaction and two quadratic effect terms were estimated. Similarly, in Equation 4, t equals 10.

Table 1(a): A circumscribed central composite design for two factors (x1 and x2) with 11 experiments, of which three are centre point replicates (exp 9â11).

The model matrix X is derived from the N × (t–1) design matrix by adding in front a column of ones (Table 1). The design matrix contains the coded factor levels and columns of contrast coefficients, as defined by the chosen experimental design. The coded factor levels are, for example, –α, –1, 0, +1 and +α for circumscribed central composite designs and the columns of contrast coefficients are obtained by multiplying the relevant coded factor levels. For example, Table 1, illustrates how the model matrix X [Table 1(b)] is derived from a central composite design for two factors with 11 experiments, of which three centre point replicates (exp 9–11) [Table 1(a)].

Table 1(b): Its model matrix X. In the last column, simulated resolution response values are given.

In matrix notation, the model is represented as follows:

where ß is the t × 1 vector of regression coefficients (e.g., β0, β1, β2, β12, β11, β22 in Equation 1) and ε is an N × 1 error vector. The coefficients b (e.g., b0, b1, b2, b12, b11, b22 in Equation 3) are then usually estimated with least squares regression,5

where XT is the transpose of the model matrix X. Consider the data of Table 1, which contains simulated values for the response resolution between two enantiomers, which elute in the same sequence in all experiments.

One should take care in modelling resolution. Usually it is not recommended unless only two peaks are of interest, which, moreover, always elute in the same sequence. We will discuss this in more detail in this paper. The calculated model for this data was found to be

Besides least squares, other regression techniques can also be used to estimate the b-coefficients.5 However, this is not done frequently.

The response surface representing the model can be visualized graphically by drawing 2D contour plots or 3D response surface plots.5 In a 2D contour plot, the isoresponse lines are shown as a function of the levels of two factors, while in a 3D response surface plot the response is represented on a third dimension, as a function of the levels of two factors. An example of both plots, representing Equation 7, is shown in Figure 1. When more than two factors are examined and modelled, all but two factors need to be fixed at a given level to draw both plots. This will be explained further.

Figure 1

Determination of the optimum for one response. Then, subsequently the model is used to determine either the optimum or acceptable experimental conditions. In this section, it is assumed that only one response needs to be optimized. Selecting acceptable conditions can be done in two different ways.

A first approach uses the model to predict the response at given experimental conditions, selected by the analyst for specific reasons. A second and more frequently applied approach determines and selects the optimum from the graphical representation (Figure 1) of the model. When more than two factors are examined, all but two factors need to be fixed at a given level to draw both plots. For three factors, at least three 2D or 3D plots can be drawn and interpreted (i.e., one plot for each combination of two factors while fixing the third at a given level). For four or more factors, which is luckily a rarely occurring situation, drawing and interpreting the graphical representations will become even more cumbersome.

Usually, the least important factor(s) is/are fixed. However, one should be aware that the response surface visualized is only a fraction of the global response surface. Occasionally it might be interesting to draw response surfaces at several levels of the fixed factor, for example, at low, intermediate and high level.

Consider the response resolution from Table 1. A high or baseline resolution is optimal. To find the optimum from a graphical representation, the part of the experimental domain with the highest response values should be determined. For example, from Figure 1, it is seen that the highest resolution values are obtained when both factors are at low level. To evaluate the robustness of the response in the optimal area, in a 2D contour plot, the density or closeness of the isoresponse lines is evaluated, whereas in a 3D response surface plot the steepness of the response surface is examined. The denser the isoresponse lines are or the steeper the response surface is, the less robust the response is in that area of the experimental domain.

It also needs to be mentioned that often in optimization the best conditions are found near the boundaries of the experimental domain, as is also the case for our example (Table 1 and Figure 1). The reason is that most responses have a continuously increasing or decreasing behaviour as a function of the factor levels. It needs to be emphasized that, regardless of the applied approach to determine the best conditions, only predictions within the examined experimental domain are recommended. Extrapolations should be avoided, because the probability, that the model is incorrect, will increase.5

After selecting the optimum, it is experimentally verified whether these experimental conditions indeed result in an acceptable response and, for example, allow a sufficient resolution between the two peaks.

Model validation. In a next step, the model is occasionally validated and the fit of the model to the experimental data evaluated. Different approaches to validate the model exist.5 Most frequently analysis of variance (ANOVA) is applied to evaluate the data set variation and to determine the significance of the terms included in the model. Occasionally, the model is then recalculated with the least non-significant terms iteratively deleted.

Tests for the significance of regression and for lack-of-fit are also often performed. An adequate model that fits the data well then results in both a significant regression and a non-significant lack-of-fit.

Another possibility to evaluate the model is by performing a residual analysis, where the experimental response and the response predicted by the model are compared for each design experiment. Large residuals or tendencies in the residuals then indicate that the model is not adequate and should be revised.

To evaluate the predictive properties of the model, although rarely done in a method optimization context, an external validation can be made using an external test set, consisting of experiments at other conditions than those of the design. Again the experimental and the predicted responses are compared and the residuals evaluated.

Though model validation is crucial for models specifically built to predict sample properties (e.g., calibration lines or multivariate calibration models) it is done relatively rarely in method optimization. It is also less beneficiary in this context. In optimization, the model is used to indicate a suitable experimental region and this region usually does not change dramatically by using, for example, a model without the non-significant coefficients.

Multiresponse Optimization

When several responses need to be optimized simultaneously, the area in the experimental domain, where an acceptable consensus between all responses occurs, needs to be determined. For this purpose, multi-criteria decision-making (MCDM) methods can be applied.5 Several MCDM methods exist, for example, the overlay of contour plots, the Pareto optimality method6 and the Derringer's desirability functions.7

A first approach makes an overlay of contour plots to determine the feasible region in which the considered responses are all acceptable or desirable. For example, in Figure 2, the feasible area for two responses (i.e., resolution >1.5 and analysis time <10 min) in the experimental domain of two examined factors, x1 and x2, is shown.

Figure 2

This approach is most practical when it concerns two factors to optimize, because for more factors one gets an incomplete picture because some factors need to be fixed at a given level. Regarding the number of responses that can be simultaneously optimized, there is, in principle, no limit. However, the more responses to be optimized, the smaller the chance of finding a feasible region where all are acceptable.

A second approach is to use the Pareto optimality method.6 An experiment is considered Pareto-optimal when no other experiment exists with a better result for one response without having a worse for another. To explain this approach, consider the data of Figure 3, where two responses (i.e., y1 = analysis time and y2 = resolution) were determined for nine experiments, for example, from a central composite design for two factors. The analysis time should be minimized and the resolution maximized. The experiments 5, 2 and 8 are Pareto-optimal.

Figure 3

This approach is most practical when it concerns two responses. It can be applied for more responses, although this leads to a less straightforward graphical interpretation (3 responses) or cannot be represented anymore. It should also be remarked that a Pareto-optimal point is not always representing a practically suitable optimum. For instance, experiment 5 is Pareto-optimal, but has a too low resolution to be useful.

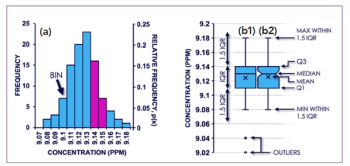

Figure 4

A third approach consists of using Derringer's desirability functions,7 where all responses are transformed on a same scale and combined to one response, which should be maximal. The transformed responses, called desirabilities, di, are situated on a scale between 0, representing a completely undesirable response, and 1, representing a desirable response. Different transformations are used, depending on whether the optimal response is maximal, minimal, or at a given predefined value (Figure 4). Usually, linear transformations are used, as in Figure 4. Then the combined response or the global desirability, D, is calculated as the geometric mean of the R individual desirabilities, di (Equation 8). Suitable conditions should be found where D is approaching 1.

To the di values in Equation 8 occasionally weights can be given by raising them to a given power, pi. Most frequently pi = 1, as in Equation 8. For more detailed information concerning transformations and weights, we refer to references 7 and 8. Determining conditions with a maximal D value can be done either by selecting the design experiment with the largest D value, or by modelling D as a function of the examined factors and visualizing its response surface by means of a contour plot or a response surface plot.

The separation of several compounds in chromatography, for example, when creating a drug-impurity profile, can also be considered a multi-criteria optimization problem. When more than two peaks need to be separated, several resolutions between consecutive peaks can be determined. The goal is to find the conditions at which the worst-separated peak pair is best separated (i.e., the conditions at which the smallest resolution is maximal). However, for this specific problem, the above MCDM methods are not applied.

As mentioned above, modelling the resolution is also not usually recommended. It can be done when there are only two peaks, which in the entire experimental domain elute in the same sequence. For more than two peaks, it becomes difficult to model resolution correctly. First, because many resolutions can be considered (i.e., between all peak pairs) but only those between consecutive peaks are of interest and, moreover, within the experimental domain the consecutive peak pairs may vary. Secondly, a calculated resolution does not distinguish situations with a similar separation degree but a reversed elution order. Between both situations, resolution is not constant but goes through zero somewhere. Using both positive and negative resolutions might solve this latter problem, but will not solve the first.

Therefore, the most appropriate approach to predict and model resolutions, for example, at given experimental domain conditions consists of first modelling the retention of the peaks as a function of the factors examined and occasionally also the peak width in case of isocratic elution. Then the resulting models allow predicting the retention time of each peak at intermediate conditions. By sorting these predicted retention times and their corresponding peak widths at each given set of conditions, resolutions between consecutive peaks can be calculated and the minimal resolution, Rsmin, determined. These Rsmin then can be plotted as a function of the examined factors. Finally, those conditions where the minimal resolution is maximal (i.e., where the worst-separated peak pair is best separated) is considered the optimum.

Conclusions

The data analysis of response surface design results and some approaches to simultaneously optimize multiple responses are discussed. Most frequently, a second-order polynomial model is built, for which the coefficients usually are calculated using least squares regression. The response surface, representing the model, can be visualized by drawing contour plots or response surface plots. Usually, the selection of the optimum is done graphically, by evaluating one or both plots.

Three MCDM methods to simultaneously optimize several responses were described (i.e., the overlay of contour plots, the Pareto optimality method and the Derringer's desirability functions). In our opinion, the latter approach is most practical for multiresponse optimization.

Yvan Vander Heyden is a professor at the Vrije Universiteit Brussel, Belgium, department of Analytical Chemistry and Pharmaceutical Technology, and heads a research group on chemometrics and separation science.

Bieke Dejaegher is a postdoctoral fellow of the Fund for Scientific Research (FWO, Vlaanderen, Belgium) working at the same department on experimental designs and their applications in method development and validation, and on the development and data analysis of herbal fingerprints.

References

1. Y. Vander Heyden, LC GC Europe, 19(9) 469–475 (2006).

2. B. Dejaegher and Y. Vander Heyden, LC GC Europe, 22(5), 256–261 (2009).

3. B. Dejaegher and Y. Vander Heyden, LC GC Europe, 20(10), 526–532 (2007).

4. B. Dejaegher and Y. Vander Heyden, LC GC Europe, 21(2), 96–102 (2008).

5. D.L. Massart et al., Handbook of Chemometrics and Qualimetrics: Part A, Elsevier, Amsterdam (1997).

6. A.K. Smilde, A. Knevelman and P.M.J. Coenegracht, J. Chromatogr., 369, 1–10 (1986).

7. G. Derringer and R. Suich, J. Qual. Technol., 12, 214–219 (1980).

8. B. Dejaegher, A. Durand and Y. Vander Heyden, Experimental Design in Method Optimization and Robustness Testing, In G. Hanrahan and F.A. Gomez (Eds), Chemometric Methods in Capillary Electrophoresis, Chapter 2, John Wiley & Sons, New Jersey, pp. 11–74 (2009).

Articles in this issue

about 16 years ago

Are you Ready for a Strong FDA?about 16 years ago

Digital Updateabout 16 years ago

Kinetic Plots Made Easyabout 16 years ago

Event NewsNewsletter

Join the global community of analytical scientists who trust LCGC for insights on the latest techniques, trends, and expert solutions in chromatography.