- May 2025

- Volume 2

- Issue 4

- Pages: 14–19

Biopharmaceutical Characterization in the Age of Artificial Intelligence

AI-powered tools are enhancing precision, efficiency, and decision-making in biopharmaceutical development. Recently, Jared Auclair and Anurag Rathore explored AI's evolving role in biopharmaceuticals in detail.

Over the past several decades, advancements in analytical tools have led to the generation of large, complex data sets for the characterization of biopharmaceuticals. Historically, interpreting these data sets has been challenging and often incomplete. However, with the recent widespread adoption of artificial intelligence (AI), which thrives on large data sets, the field of biopharmaceutical analysis is on the brink of a transformative shift. From high-throughput chromatographic and mass spectrometric analysis to deep learning-driven data interpretation, AI-powered tools are enhancing precision, efficiency, and decision-making in biopharmaceutical development. This column explores the evolving role of AI in biopharmaceutical characterization, highlighting breakthroughs in machine learning for complex data analysis, the automation of method development, and AI-driven decision-making in process control. Additionally, we examine the regulatory landscape, including the potential for real-time lot release through integrated process analytical technology, as well as the challenges associated with AI adoption in analytical workflows. As AI-driven strategies gain traction, understanding their capabilities and limitations will be critical to the future of drug development, manufacturing, and characterization.

The complexity of biopharmaceutical products (such as monoclonal antibodies and recombinant proteins) requires the use of multiple high-resolution and complementary analytical tools to ensure quality, safety, and efficacy. Thus, over the last several decades, there have been significant advances in analytical tools for the characterization of biopharmaceutical products. Among the key analytical advances is high-resolution mass spectrometry (HRMS), which provides precise structural analysis and enables the detection of post-translational modifications. Advanced chromatography techniques, such as liquid chromatography–mass spectrometry (LC–MS), facilitate the differentiation of biosimilars and allow for detailed characterization of protein heterogeneity. Capillary electrophoresis (CE) has become a valuable tool for impurity profiling and charge variant analysis. Nuclear magnetic resonance (NMR) spectroscopy plays a critical role in higher-order structure analysis and comparability assessments. Additionally, next-generation sequencing (NGS) offers genomic insights that support precision medicine approaches (1).

The analytical characterization of biopharmaceuticals using the tools mentioned above produces vast and highly complex data sets. These data sets capture detailed information about molecular structure, product quality attributes, and potential impurities, but the sheer volume and complexity of the data present a significant challenge in terms of analysis and interpretation. Extracting meaningful insights from such large, multidimensional data sets often requires considerable time, expertise, and manual effort, which can limit efficiency and consistency. At the same time, this data-rich environment presents an opportunity to explore innovative approaches to streamline data interpretation and enhance decision-making. In recent years, conversations across the biopharmaceutical industry have increasingly focused on the potential of artificial intelligence (AI) and machine learning (ML) to revolutionize data analysis, offering new strategies to improve speed, accuracy, and consistency in biopharmaceutical characterization.

The Rise of AI in Biopharmaceutical Analytics

As analytical chemistry continues to generate increasingly large and complex data sets, AI and ML have emerged as transformative tools to help scientists extract meaningful insights and streamline data interpretation. AI refers broadly to computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, pattern recognition, and decision-making. Within this broad field, ML is a subset focused on developing algorithms that can learn from data and improve their performance over time without being explicitly programmed for each task. Deep learning (DL), in turn, is a specialized area of ML that uses multi-layered neural networks to automatically learn and extract patterns from highly complex, high-dimensional data (2).

The integration of AI in analytical chemistry has evolved progressively over several decades. Initial research in the 1950s and 1960s laid the groundwork for AI with basic algorithms designed to simulate human reasoning. By the 1970s, rule-based systems and early AI software were introduced to simulate chemical processes and model reactions. Significant progress was made in the 1980s, when neural networks were applied to analytical chemistry for the first time, enhancing data modeling and pattern recognition. In the 1990s and 2000s, as computational power increased, AI techniques became more sophisticated, enabling automated analysis of large chemical data sets and the development of predictive models. Over the last decade, the emergence of deep learning and big data approaches has accelerated AI adoption in analytical workflows, particularly in fields like proteomics, metabolomics, and process analytical technology (PAT) (2).

In modern analytical chemistry, these technologies are being leveraged to manage the challenges of interpreting vast and heterogeneous data produced by techniques such as mass spectrometry, spectroscopy, and chromatography. AI and ML can automate processes like spectral deconvolution, impurity detection, and method optimization, significantly improving accuracy, speed, and consistency. Deep learning, in particular, excels at analyzing unstructured data, such as complex chromatograms or spectral patterns, that would traditionally require expert human interpretation. Chemometrics, the statistical discipline historically used in analytical chemistry to analyze chemical data, also remains relevant. Although chemometrics provides interpretable and statistically sound models, the integration of AI and ML expands its capabilities, enabling the analysis of more complex and nonlinear data sets (2).

The growing influence of AI and machine learning in analytical chemistry is not limited to academic research—it is rapidly making its way into the highly regulated and data-intensive world of biopharmaceutical development. As biopharmaceutical products become more complex and analytical data sets grow in size and dimensionality, the need for advanced data analysis tools is increasingly apparent. The same AI-driven approaches that have transformed data interpretation in spectroscopy and chromatography are now being adapted to address critical challenges in biopharmaceutical characterization. In the next section, we will explore how these technologies are being applied across the biopharmaceutical product life cycle—from structural characterization and quality attribute monitoring to process optimization and real-time decision-making—highlighting both the breakthroughs and the barriers that remain.

Applications of AI in Biopharmaceutical Characterization

AI for Data Analysis and Interpretation

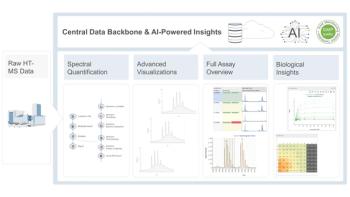

The application of AI and ML has been instrumental in advancing data analysis and interpretation in biopharmaceutical characterization, particularly in handling large, complex data sets generated by analytical methods such as MS and chromatography. Machine learning techniques are being used extensively to recognize patterns, deconvolute overlapping peaks, and extract meaningful information from noisy and high-dimensional data sets (3,4). For example, supervised learning algorithms such as random forest and decision tree models have demonstrated success in predicting critical process attributes from sensor data in downstream processing (5).
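To make the idea of a tree-ensemble model trained on sensor data concrete, the following is a minimal sketch of a small random-forest-style regressor written in plain Python. It is not the model from reference (5): the sensor names (UV, conductivity, pH), the synthetic response in `simulate_runs`, and all hyperparameters are assumptions chosen only for illustration. In practice a maintained library implementation would normally be used instead.

```python
import random
import statistics


def build_tree(X, y, depth, rng, n_try):
    """Grow a regression tree; each split considers a random subset of features."""
    if depth == 0 or len(y) < 4:
        return statistics.fmean(y)  # leaf: mean of the training targets
    best = None
    for j in rng.sample(range(len(X[0])), n_try):
        # Candidate thresholds: every observed value except the largest,
        # so both sides of the split are guaranteed to be non-empty.
        for thr in sorted({row[j] for row in X})[:-1]:
            left = [t for row, t in zip(X, y) if row[j] <= thr]
            right = [t for row, t in zip(X, y) if row[j] > thr]
            lm, rm = statistics.fmean(left), statistics.fmean(right)
            sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr)
    if best is None:  # no sampled feature could be split
        return statistics.fmean(y)
    _, j, thr = best
    lo = [(row, t) for row, t in zip(X, y) if row[j] <= thr]
    hi = [(row, t) for row, t in zip(X, y) if row[j] > thr]
    return (j, thr,
            build_tree([r for r, _ in lo], [t for _, t in lo], depth - 1, rng, n_try),
            build_tree([r for r, _ in hi], [t for _, t in hi], depth - 1, rng, n_try))


def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf value."""
    while isinstance(node, tuple):
        j, thr, left, right = node
        node = left if row[j] <= thr else right
    return node


def fit_forest(X, y, n_trees=20, depth=4, seed=0):
    """Train each tree on a bootstrap resample of the runs."""
    rng = random.Random(seed)
    n_try = max(1, len(X[0]) - 1)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 depth, rng, n_try))
    return forest


def predict_forest(forest, row):
    """Average the predictions of all trees."""
    return statistics.fmean([predict_tree(tree, row) for tree in forest])


def simulate_runs(n, rng):
    """Synthetic (assumed) sensor readings and a made-up process attribute."""
    X, y = [], []
    for _ in range(n):
        uv, cond, ph = rng.uniform(0, 1), rng.uniform(5, 15), rng.uniform(6.5, 7.5)
        X.append([uv, cond, ph])
        y.append(60 + 20 * uv - 5 * abs(ph - 7) + rng.gauss(0, 0.5))
    return X, y


def rmse(pred, truth):
    return (sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth)) ** 0.5


rng = random.Random(42)
X_train, y_train = simulate_runs(80, rng)
X_test, y_test = simulate_runs(20, rng)
forest = fit_forest(X_train, y_train)

rmse_forest = rmse([predict_forest(forest, row) for row in X_test], y_test)
rmse_baseline = rmse([statistics.fmean(y_train)] * len(y_test), y_test)
print(f"forest RMSE {rmse_forest:.2f} vs. mean-only baseline {rmse_baseline:.2f}")
```

Comparing against a mean-only baseline on held-out runs is a simple sanity check that the ensemble has learned something from the sensor signals rather than memorizing the training set.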

In addition, deep learning approaches are being leveraged to advance structural elucidation, especially in the identification of post-translational modifications (PTMs) and other complex molecular features (6). Recent developments have shown that deep neural networks can predict peptide fragmentation spectra, retention times, and ion mobility with high accuracy, which improves the annotation of MS-based proteomics data (3,7). These AI-enabled tools have transformed data interpretation from a manual, expert-driven process to an automated, scalable workflow, allowing for more comprehensive and accurate characterization of biopharmaceutical products.

AI in Method Development and Automation

Beyond data analysis, AI is increasingly being applied to automate and optimize analytical method development. Traditional approaches to method development have relied heavily on manual trial-and-error processes, often consuming significant time and resources. Machine learning models now enable predictive design of experiments (DoE), facilitating automated selection of optimal conditions for chromatography, MS acquisition, and sample preparation (4,8). For example, closed-loop experimentation driven by ML algorithms allows researchers to adjust experimental parameters in real time based on model predictions, reducing the need for extensive manual intervention (7).
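The closed-loop idea can be sketched in a few lines: fit a surrogate model to the experiments run so far, let the model propose the next condition, run it, and repeat. The example below is an illustrative assumption rather than a method from the cited work. `run_experiment` is a hypothetical stand-in for an instrument run that returns peak resolution as a function of a single gradient-slope setting, and the surrogate is a simple least-squares quadratic instead of a full ML model.

```python
import random


def run_experiment(slope, rng):
    """Hypothetical instrument run: resolution peaks near slope = 3.2 (assumed)."""
    return 2.0 - 0.15 * (slope - 3.2) ** 2 + rng.gauss(0, 0.02)


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            m[r] = [x - f * p for x, p in zip(m[r], m[col])]
    x = [0.0] * 3
    for i in (2, 1, 0):
        x[i] = (m[i][3] - sum(m[i][j] * x[j] for j in range(i + 1, 3))) / m[i][i]
    return x


def fit_quadratic(xs, ys):
    """Least-squares fit of y = c0 + c1*x + c2*x^2 via the normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    a = [[s[i + j] for j in range(3)] for i in range(3)]
    return solve3(a, t)


def closed_loop(lo=1.0, hi=5.0, n_rounds=5, seed=1):
    """Alternate between refitting the surrogate and running its suggestion."""
    rng = random.Random(seed)
    xs = [lo, (lo + hi) / 2, hi]  # small initial design
    ys = [run_experiment(x, rng) for x in xs]
    for _ in range(n_rounds):
        c0, c1, c2 = fit_quadratic(xs, ys)
        if c2 < 0:
            nxt = -c1 / (2 * c2)  # maximum of the concave surrogate
        else:
            nxt = xs[ys.index(max(ys))]  # fall back to the best observed setting
        nxt = min(hi, max(lo, nxt))  # stay inside the allowed range
        xs.append(nxt)
        ys.append(run_experiment(nxt, rng))
    return xs, ys


xs, ys = closed_loop()
print(f"last proposed slope: {xs[-1]:.2f}, best observed resolution: {max(ys):.2f}")
```

A real method-development loop would optimize several parameters at once and would usually use a surrogate that also reports uncertainty, so that the next experiment can balance exploring new conditions against refining the current best.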

Additionally, automated liquid handling systems integrated with ML-guided protocols are being adopted to improve sample preparation workflows. These systems enhance throughput and reproducibility, while AI models evaluate the impact of experimental variables on analytical outcomes, further driving method robustness (4).

AI-Driven PAT and Real-Time Decision-Making

One of the most promising areas of AI application in biopharmaceutical characterization is within PAT frameworks, where real-time monitoring and control of manufacturing processes are essential. AI models, particularly ML algorithms, are being integrated into PAT systems to predict critical quality attributes (CQAs) and critical process parameters (CPPs) in real time (8,9). In continuous manufacturing of monoclonal antibodies (mAbs), for instance, ML models such as random forests have been effectively used to predict process attributes like chromatography performance based on real-time sensor data, achieving high prediction accuracy and low error rates (5).

This integration of AI enables dynamic process control and the possibility of real-time release testing (RTRT), reducing reliance on traditional end-product testing. AI-driven PAT frameworks align well with quality-by-design (QbD) principles by supporting risk-based control strategies and continuous process verification (9). However, challenges remain, including ensuring model interpretability, data quality, and regulatory acceptance of AI-driven decision-making in good manufacturing practice (GMP) environments.
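As a rough illustration of how streaming CQA predictions could feed a control decision, the sketch below smooths a sequence of model outputs with an exponentially weighted moving average (EWMA) and flags material for diversion when the smoothed value leaves its limits. Everything here is an assumption made for the example: the `Limits` class, the `monitor` function, the choice of EWMA, the smoothing factor, and the aggregate-percentage values and limits are hypothetical and are not taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    """Acceptance range for a predicted quality attribute (hypothetical values)."""
    lower: float
    upper: float


def monitor(predictions, limits, alpha=0.3):
    """Smooth streaming CQA predictions with an EWMA and flag excursions."""
    if not predictions:
        return []
    ewma = predictions[0]
    decisions = []
    for p in predictions:
        # A higher alpha reacts faster to change but is more sensitive to noise.
        ewma = alpha * p + (1 - alpha) * ewma
        in_range = limits.lower <= ewma <= limits.upper
        decisions.append("continue" if in_range else "divert")
    return decisions


# Assumed soft-sensor output: predicted aggregate content (%) per time point.
predicted_aggregate = [1.0, 1.1, 1.2, 2.8, 3.2, 3.1, 0.8]
decisions = monitor(predicted_aggregate, Limits(lower=0.0, upper=2.0))
print(decisions)
```

Smoothing trades responsiveness for robustness: a single noisy prediction does not trigger a diversion, but a sustained excursion does. Any such rule would itself need to be justified and validated within the control strategy.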

Regulatory Landscape and Industry Perspectives

The integration of AI and ML technologies into biopharmaceutical characterization is transforming drug discovery, development, and manufacturing. However, this rapid adoption is occurring within a highly regulated industry where patient safety, product quality, and data integrity are paramount. Regulatory agencies around the world are actively developing frameworks to manage both the opportunities and risks associated with AI-enabled analytical workflows.

In the United States, the Food and Drug Administration (FDA) has issued draft guidance focused specifically on the use of AI to support regulatory decision-making across the drug product life cycle (10). This guidance establishes a risk-based credibility assessment framework that requires sponsors to document the purpose, risk level, and validation strategy for any AI model used to support regulatory submissions. Importantly, the guidance emphasizes that AI systems used in manufacturing, analytical method development, and quality control must maintain transparency, reproducibility, and fitness for purpose. In parallel, the FDA has established an internal AI Steering Committee to coordinate AI initiatives across its centers (11). This includes oversight of AI applications in areas such as post-marketing surveillance, clinical trial monitoring, and manufacturing process control.

Internationally, similar efforts are underway. The European Medicines Agency (EMA) and the Heads of Medicines Agencies (HMA) have issued a five-year work plan to guide the responsible use of AI in medicines regulation, focusing on risk-based approaches, workforce training, and ethical use (12). Other regulatory bodies in countries like Canada, China, Japan, and the United Kingdom are also advancing policies to ensure the safe and equitable use of AI in healthcare, often emphasizing transparency, explainability, and fairness.

Despite this progress, the regulatory landscape remains fragmented, and several challenges persist. A key concern is the “black box” nature of many advanced AI models, particularly deep learning algorithms, which complicates transparency and interpretability in regulatory decision-making (12). Regulators are also focused on ensuring that AI models used in analytical workflows are free from biases introduced by incomplete or non-representative training data sets (11). In biopharmaceutical manufacturing, for example, biased models could lead to incorrect assessment of critical quality attributes, potentially affecting product safety and efficacy.

Additionally, regulatory agencies are grappling with the need to maintain the credibility and reliability of AI outputs over time, particularly in dynamic manufacturing environments where processes and data inputs can evolve. The FDA’s draft guidance specifically requires life cycle maintenance of AI model credibility, including re-validation when model inputs or contexts of use change (10).
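One simple way to operationalize this kind of life cycle monitoring is to compare recent model inputs with the data the model was trained on and to trigger a review when they diverge. The sketch below uses a z-test on the mean of a single input. This statistic, the threshold of 3, the function name `needs_revalidation`, and the feed pH values are all assumptions for illustration; the guidance does not prescribe a particular drift test.

```python
import statistics


def needs_revalidation(train_values, recent_values, z_limit=3.0):
    """Flag a shift in the mean of a model input relative to the training data.

    Returns (flag, z), where z is the shift of the recent mean expressed in
    standard errors of the training distribution.
    """
    mu = statistics.fmean(train_values)
    sd = statistics.stdev(train_values)
    if sd == 0:
        raise ValueError("training values have zero variance; z-score undefined")
    standard_error = sd / len(recent_values) ** 0.5
    z = (statistics.fmean(recent_values) - mu) / standard_error
    return abs(z) > z_limit, z


# Hypothetical feed pH readings seen during model training.
train_ph = [7.00, 7.02, 6.98, 7.01, 6.99, 7.03, 6.97, 7.00]

stable_flag, stable_z = needs_revalidation(train_ph, [7.01, 6.99, 7.00, 7.02])
shifted_flag, shifted_z = needs_revalidation(train_ph, [7.10, 7.12, 7.09, 7.11])
print(f"stable window:  flag={stable_flag}, z={stable_z:.1f}")
print(f"shifted window: flag={shifted_flag}, z={shifted_z:.1f}")
```

A mean-shift test catches only one kind of change. A production monitoring plan would typically also track variance, multivariate structure, and the model's prediction error against reference measurements.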

Privacy and data governance are also critical regulatory concerns. AI-driven analytical tools often require access to large volumes of sensitive clinical, process, or patient data. Regulators emphasize compliance with data protection laws and advocate for the ethical use of data in AI applications (11,12).

Finally, there is growing recognition that conventional regulatory review processes may not be well suited to evaluate novel AI-generated analytical methods. This challenge has been described as the “Move 37 Conundrum”—a reference to AI-generated outcomes that are unprecedented and difficult to evaluate using existing regulatory frameworks (11). Agencies like the FDA are encouraging early engagement from sponsors to discuss AI model development and validation plans, and to foster greater regulatory-scientific collaboration.

Together, these emerging regulatory frameworks reflect an evolving consensus: AI can bring significant efficiency and quality improvements to biopharmaceutical characterization, but it must be implemented within a transparent, risk-based, and ethically sound regulatory structure.

Challenges and Limitations

While AI and ML hold immense promise for advancing biopharmaceutical characterization, several challenges and limitations must be addressed to fully realize their potential. One of the foremost concerns is the quality and representativeness of the data used to train AI models. Analytical data sets in biopharmaceutical development are often large, complex, and heterogeneous, yet may still contain gaps, inconsistencies, or biases. These data limitations can result in AI models that perform well under controlled conditions but fail to generalize across different manufacturing scenarios or product types (3,11). In particular, under-representation of certain process conditions, molecular attributes, or population-specific variables in training data can introduce unintended biases, leading to unreliable or inequitable outcomes.

Another significant limitation is the lack of transparency and explainability in many AI models, particularly those based on deep learning architectures. These “black box” systems may produce highly accurate predictions but offer limited insight into how conclusions are reached, complicating regulatory review and industry acceptance (11,12). In regulated environments such as pharmaceutical manufacturing and quality control, stakeholders must be able to interpret and justify AI-driven decisions, especially when they pertain to product quality, safety, or compliance.

Operational challenges also exist. Implementing AI systems requires substantial technical expertise, infrastructure, and cultural change within organizations. Many biopharmaceutical companies face barriers to integrating AI models into established quality systems and manufacturing workflows, particularly given the industry’s cautious approach to adopting new, unproven technologies (8,9). Moreover, AI models require continuous monitoring, maintenance, and periodic revalidation to remain credible and compliant over the product life cycle (10).

Looking forward, the future of AI in biopharmaceutical characterization will depend on addressing these limitations while advancing technical capabilities and regulatory clarity. Several recent initiatives point to a more structured and risk-based regulatory approach. Both the U.S. FDA and the EMA are developing frameworks to promote responsible AI use, emphasizing transparency, risk assessment, and model validation (11,12). Collaborative efforts between regulators, industry, and academic researchers are crucial to ensure that AI technologies are implemented safely, effectively, and equitably.

In parallel, emerging AI techniques—such as explainable AI, federated learning, and synthetic data generation—offer promising solutions to current limitations. For instance, synthetic data sets generated by AI models can be used to benchmark analytical workflows without compromising sensitive patient or proprietary data (7). Furthermore, as AI models become more integrated across the product life cycle—from analytical characterization to real-time process control—there is potential to move toward real-time release testing and continuous manufacturing models that could dramatically reduce costs and accelerate product availability (9).

Ultimately, while challenges remain, the convergence of technical innovation, regulatory engagement, and ethical oversight will shape a future where AI-driven analytical tools are a trusted and integral component of biopharmaceutical development. Thoughtful adoption, grounded in scientific rigor and transparency, will be essential to ensure that these technologies deliver on their promise to improve efficiency, quality, and patient outcomes.

Future Outlook

The adoption of AI and ML in biopharmaceutical characterization is poised to accelerate the pace and precision of drug development. By enabling rapid interpretation of complex analytical data and facilitating real-time monitoring of critical quality attributes, AI technologies have the potential to significantly improve both the efficiency and accuracy of biopharmaceutical workflows (3,11). As next-generation analytical platforms evolve, AI-driven models will play a pivotal role in enhancing process understanding, reducing development timelines, and lowering production costs—key drivers in the ongoing effort to make biotherapeutic products more accessible and affordable (13).

One of the most significant shifts anticipated in the coming years is the movement toward greater automation and reduced human intervention across analytical and manufacturing processes. Advances in AI and data analytics will facilitate the development of smart, integrated analytical platforms capable of real-time monitoring and control, aligning with the broader transition to Industry 4.0 and 5.0 paradigms (13). This evolution will likely see a substantial reduction in offline testing and manual data interpretation, replaced by inline, at-line, and online monitoring strategies supported by robust, validated AI models (9). As demonstrated in recent studies, integrated AI-enabled systems can support continuous bioprocessing and real-time release testing, minimizing batch failures and ensuring consistent product quality (5,8).

However, this future is not without challenges. The biopharmaceutical industry will need to carefully balance the drive for technological innovation with the demands of regulatory compliance, data integrity, and scientific rigor. Regulatory agencies are increasingly recognizing the transformative potential of AI but continue to emphasize the need for transparency, model explainability, and risk-based governance frameworks (10,12). Ensuring that AI-driven tools meet these standards—while maintaining flexibility to adapt to new data and process changes—will be essential to secure regulatory acceptance and public trust.

Looking ahead, the successful integration of AI into biopharmaceutical characterization will require close collaboration between industry, regulators, and academic researchers. Investments in workforce training, infrastructure, and interdisciplinary partnerships will be critical to building the knowledge base and technical capacity needed to support responsible AI adoption. If implemented thoughtfully, AI-enabled analytical platforms will not only streamline biopharmaceutical development but also contribute to a broader transformation of the industry—bringing safer, more effective, and more affordable medicines to patients worldwide.

Conclusion

AI and ML are rapidly reshaping the landscape of biopharmaceutical characterization, offering powerful tools to manage the growing complexity of analytical data and manufacturing processes. From enhancing data interpretation and automating method development to enabling real-time monitoring and decision-making, AI has the potential to transform how biopharmaceutical products are developed, manufactured, and controlled. These technologies promise to improve efficiency, increase precision, and reduce costs, ultimately supporting the delivery of safer and more effective medicines to patients.

However, the successful integration of AI into biopharmaceutical workflows requires careful and responsible adoption. It is essential to ensure that AI models are transparent, scientifically sound, and compliant with regulatory expectations. Industry leaders must prioritize data quality, model validation, and ethical considerations to safeguard product quality and patient safety.

Moving forward, collaboration will be key. Continued dialogue between scientists, data scientists, and regulators will be critical to establish clear guidelines, share best practices, and build trust in AI-enabled systems. Together, these stakeholders can ensure that the transformative potential of AI is realized in a way that advances innovation while maintaining the highest standards of quality, compliance, and patient care.

References

(1) Khan, M. Innovations in Analytical Methodologies for Biopharmaceutical Characterization. AZo Life Sciences webpage.

(2) Rial, R. C. AI in Analytical Chemistry: Advancements, Challenges, and Future Directions. Talanta 2024, 274. DOI:

(3) Dens, C.; Adams, C.; Laukens, K.; et al. Machine Learning Strategies to Tackle Data Challenges in Mass Spectrometry-Based Proteomics. J. Am. Soc. Mass Spectrom. 2024, 35 (9), 2143–2155. DOI:

(4) Movassaghi, C. S.; Sun, J.; Jiang, Y. M.; et al. Recent Advances in Mass Spectrometry-Based Bottom-Up Proteomics. Anal. Chem. 2025, 97 (9), 4728–4749. DOI:

(5) Nikita, S.; Thakur, G.; Jesubalan, N. G.; et al. AI-ML Applications in Bioprocessing: ML as an Enabler of Real Time Quality Prediction in Continuous Manufacturing of mAbs. Comput. Chem. Eng. 2022, 164. DOI:

(6) Mann, M.; Kumar, C.; Zeng, W. F.; et al. Artificial Intelligence for Proteomics and Biomarker Discovery. Cell Syst. 2021, 12 (8), 759–770. DOI:

(7) Neely, B. A.; Dorfer, V.; Martens, L.; et al. Toward an Integrated Machine Learning Model of a Proteomics Experiment. J. Proteome Res. 2023, 22 (3), 681–696. DOI:

(8) Puranik, A.; Dandekar, P.; Jain, R. Exploring the Potential of Machine Learning for More Efficient Development and Production of Biopharmaceuticals. Biotechnol. Prog. 2022, 38 (6), e3291. DOI:

(9) Chaudhary, S.; Muthudoss, P.; Madheswaran, T.; et al. Artificial Intelligence (AI) in Drug Product Designing, Development, and Manufacturing. In A Handbook of Artificial Intelligence in Drug Delivery; Elsevier, 2023; pp 395–442.

(10) U.S. Food and Drug Administration. Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products: Guidance for Industry and Other Interested Parties.

(11) Singh, R.; Paxton, M.; Auclair, J. Regulating the AI-Enabled Ecosystem for Human Therapeutics. Commun. Med. (in press).

(12) Mirakhori, F.; Niazi, S. K. Harnessing the AI/ML in Drug and Biological Products Discovery and Development: The Regulatory Perspective. Pharmaceuticals (Basel) 2025, 18 (1), 47. DOI:

(13) Rathore, A. S.; Sarin, D. What Should Next-Generation Analytical Platforms for Biopharmaceutical Production Look Like? Trends Biotechnol. 2024, 42 (3), 282–292. DOI:
