E-Separation Solutions

- E-Separation Solutions, 11-23-2010

- Volume 0

- Issue 0

Data Handling/Software

Chromatographers rely on software for many laboratory functions, including method development, instrument control, data acquisition and analysis, and data archival. Participants in this Tech Forum are Tony Owen of Agilent Technologies, Damien Rosser of ALMSCO International, David Chiang of Sage-N Research, and Mark Harnois of Waters Corporation.

What trends are becoming apparent with software for chromatography laboratories?

Owen: Standardization and consolidation, resulting in fewer suppliers to the industry. Today four main suppliers of chromatography data systems (CDS) remain: Thermo, Waters, Agilent, and Dionex. Control of instrumentation from multiple vendors has become standard functionality, enabling customers to select software and instrument suppliers more freely. Easier interoperability and generic data formats are emerging and will serve a key customer need: simpler data evaluation and data mining across multiple laboratories or even sites. Support for, and openness to, standard data formats will be key to future growth in the CDS market.

Rosser: Intuitive user environments that extract the highest productivity from chromatographic hardware without incurring additional learning curves. Software that can ease, or even obviate, human chromatographic review, so that increased sample throughput is not compromised. Data mining capabilities that can extract more, and better, information from complex data sets.

Chiang: One of the problems currently being faced is the lack of computing power in laboratories. Researchers are unable to process all of their data fully, either because they lack the necessary computing resources or because their algorithms are designed for efficiency rather than comprehensiveness. Many scientists agree that they have valuable data locked up inside large datasets but no way to extract the results. There is also the danger of data loss, or of errors made in the early stages of discovery. Both problems can seriously disrupt the entire process of bringing new drugs to market.

The big challenge is the geometric explosion of data, which has required software to adapt in several ways. The trend has been a shift from PC to server software to achieve the required robustness and throughput. With datasets this large, it is no longer feasible to have a technician sit and click through menus. PC software, designed for manual interrogation of simpler datasets, is no longer sufficient; robust, server-class, statistically sound algorithms are required to search the haystack for the needles.

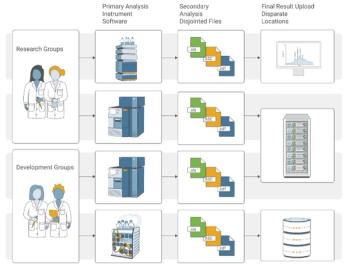

Harnois: Because of merger and acquisition activity across a variety of markets, there is a growing desire to standardize software onto fewer platforms. Companies are looking for ways to consolidate their laboratory information management system (LIMS), electronic laboratory notebook (ELN), CDS, and other software onto a single global platform as a means to optimize business operations. Within the pharmaceutical industry, for example, a decrease in new drug applications and an increase in product recalls mean that simply running the same legacy workflows is no longer effective. Companies are looking for chromatography software solutions that can streamline method development and validation, facilitate regulatory compliance, improve global communication, and reduce total cost of ownership.

What type of software has most changed the way analysts perform their jobs?

Owen: The availability of content management solutions and intelligent interfaces (for example, between LIMS and CDS) has most changed the way people do their jobs. Combined with the existing CDS and LIMS landscape, these tools allow laboratories to move to paperless operation, reducing the number of manual data-transfer steps and thus also human errors.

Rosser: For chromatographers who use mass spectrometry (MS), there have been huge improvements in peak integration software. Many software platforms contain integration algorithms originally intended for single-channel data streams, such as those from flame ionization or fluorescence detectors. Reviewers of gas chromatography–mass spectrometry (GC–MS) or liquid chromatography–mass spectrometry (LC–MS) data have been encumbered by the need to conform to a rigid system of empirical parameters that is often unreliable in complex matrix analysis, demanding manual, and therefore subjective, intervention. MS data files contain so much latent information that an alternative, parameter-free approach to peak integration is possible. It is important that chromatography providers move away from empirical peak detection parameters to create a simplified user interface that can scale up to the demands of higher sample throughput.

Chiang: Proteomics technology is important because it is the only solution for characterizing posttranslational modifications (PTMs), such as phosphorylation, alongside gene expression for vital cancer and stem cell research. Because of the important role of proteomics in cancer research, the critical nature of the data generated, and the need for high-throughput analyses, an effective IT infrastructure with data storage and handling capabilities must be in place to ensure data integrity and safety. It is not feasible to use software that once handled a handful of proteins in a simple gel spot for the full proteome of organelles. Proteomics analysis is now akin to the software hedge funds use to uncover hidden market trends: in proteomics, search engines are used to uncover low-abundance peptides and proteins and their posttranslational modifications.

Harnois: Undoubtedly, chromatography data systems have changed the way chromatographers work, improving their productivity in method development and validation, compliance, data review, and collaboration. However, the impact of ELNs on laboratory operations is perhaps even more profound: these applications improve the effectiveness of analysts who use a broad range of analytical technologies, not simply chromatography systems. We are starting to see that ELNs are not just laboratory journals, but an electronic environment for controlling laboratory workflows, much like a CDS but for more of the laboratory.

What analytical techniques have benefited the most from software applications?

Owen: I think the hyphenated high-end LC–MS-MS and GC–MS-MS techniques have benefited most from the enhanced application-based offerings in MS software platforms. The amount of qualitative information that, for instance, a Q-TOF system can provide is enormous, and it takes very smart and powerful software to extract the key information from the acquired data.

Chiang: Mass spectrometry. The large amounts of data generated by high-throughput mass spectrometers can make it difficult to find the data needed for major drug discoveries. The nature of the data analysis needed for proteomics is changing; it is now more like a hedge fund mining data than an administrative assistant running an Excel spreadsheet. With this in mind, high-end proteomics product companies have developed specialized IT solutions that integrate hardware and software. These systems offer a proteomics workflow that ensures safe storage and retrieval of proteomics data, effective backup and recovery, and smoother data analysis.

Harnois: I think that the analytical techniques with the highest data processing requirements, such as MS and NMR, have benefited the most from software applications. For example, MS has benefited on both the data acquisition side, with rapid chromatography methods for LC–MS-MS, and the data processing side, with tremendously powerful data deconvolution capabilities. LC–MS and LC–MS-MS data processing that would previously have taken days to weeks can now be completed in a matter of minutes in a wide range of applications, including proteomics, metabonomics, and bioanalysis. Greater software power isn’t just benefiting the MS specialist but also the chromatographer, because a combination of software and hardware improvements is making LC–MS and LC–MS-MS accessible to the chromatographer within many CDS solutions.

In last year’s Data Handling/Software Tech Forum, ELN software was identified as a growth area. Does it continue to be an important area?

Owen: Absolutely. The need to increase research efficiency remains unchanged, and the ELN is a key tool for raising efficiency.

Rosser: Yes, we would hope so. In fact, platform-neutral data mining products have already been developed that embody a data analysis and reporting environment complementing the project-based hierarchy of the ELN. It is important that experiments are documented and results used in a timely manner. Storing information electronically reduces the errors associated with transcribing from a paper source. Data analysis and data mining reports also fall into this category. As part of good laboratory practice (GLP), the reports generated can provide valuable information about, for example, the analytical method, the compounds reported, and the chosen search criteria. Electronic reporting will always be an important part of any laboratory quality system. The main difficulties have been ease of use and availability in some work environments.

Harnois: Market predictions are always hard to make, but yes, I would agree that the growth prospects for ELN software are bright, as many of the historical barriers to ELN adoption are fading. For example, discovery organizations initially hesitated to move toward ELNs because of intellectual property (IP) concerns. In recent years, however, their own legal departments have expressed a preference for the electronic traceability that ELNs bring to the defense or prosecution of IP. Likewise, QC departments, traditionally even more conservative than discovery, are beginning to use ELNs to improve quality by implementing electronic worksheets that control laboratory workflows.

What new software developments do you expect to see in the upcoming year?

Owen: More modern, web-based front-end applications; support for virtualized systems (for example, VMware); cross-technique generic data formats; and cloud computing becoming an accepted alternative.

Rosser: Several markets require an additional tier of processing software, such as fingerprinting and chemometrics, to classify complex data sets. Many chromatography vendors have avoided this area because it calls on unusual software skills or is deemed too high-risk for commercial gain. It has been left to the laboratory or institution rather than embraced by instrument manufacturers. Up to now, comprehensive fingerprinting of GC–MS (and LC–MS) data has mainly been based on time-aligned chromatographic data in which a substance is represented by a single ion profile. Several open-source applications do this. Fingerprinting could be greatly enhanced by introducing fully deconvolved substance spectra before time alignment.
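As a rough illustration of the time-alignment step Rosser describes, the sketch below aligns one single-ion profile to a reference by trying every integer scan shift within a window and keeping the shift whose overlap (dot product) with the reference is largest. This constant-shift, dot-product approach is an assumption chosen for simplicity; it is not the method of any vendor or open-source package mentioned here, and the names `shift_profile`, `best_shift`, `align`, and the `max_shift` window are hypothetical.

```python
from typing import List, Sequence


def shift_profile(profile: Sequence[float], shift: int) -> List[float]:
    """Move every point of a single-ion profile by `shift` scans.

    Points shifted past either end are dropped and vacated scans are
    zero-filled (no wrap-around).
    """
    n = len(profile)
    shifted = [0.0] * n
    for i, value in enumerate(profile):
        j = i + shift
        if 0 <= j < n:
            shifted[j] = float(value)
    return shifted


def best_shift(reference: Sequence[float], sample: Sequence[float], max_shift: int) -> int:
    """Return the integer shift in [-max_shift, max_shift] that maximizes the
    dot product between the reference and the shifted sample profile.

    Hypothetical helper: a simple constant-shift alignment, assumed for illustration.
    """
    best, best_score = 0, float("-inf")
    for shift in range(-max_shift, max_shift + 1):
        candidate = shift_profile(sample, shift)
        score = sum(r * s for r, s in zip(reference, candidate))
        if score > best_score:  # ties keep the most negative shift tried first
            best, best_score = shift, score
    return best


def align(reference: Sequence[float], sample: Sequence[float], max_shift: int = 5) -> List[float]:
    """Time-align `sample` to `reference` using a single constant shift."""
    return shift_profile(sample, best_shift(reference, sample, max_shift))


if __name__ == "__main__":
    # Toy single-ion profiles: the sample peak elutes two scans later.
    reference = [0, 0, 1, 5, 1, 0, 0, 0]
    sample = [0, 0, 0, 0, 1, 5, 1, 0]
    print(best_shift(reference, sample, max_shift=5))  # prints -2
    print(align(reference, sample))
```

A single constant shift cannot correct retention drift that varies along the run; nonlinear methods such as dynamic time warping are commonly used for that case.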

Chiang: Within proteomics analyses, there is a need for an integrated software workflow that can identify peptides and proteins, identify their PTMs (particularly phosphorylation), and quantify the samples. The pieces are all there, but integrating them can be difficult, especially for high-sensitivity, statistically sound analyses. We can return to the hedge fund comparison to see how efficient proteomics data analysis works. On one side is the high-value trader, who combines deep expertise in currency exchange with data analysis to discover potential new trades. On the other side are large computer servers and storage systems running semicustom software that slices and dices the data to find the billion-dollar hidden trend.

The evolution of software products from point solutions to a robust, server-class platform with flexibility for customization will be very important. In discovery proteomics, biologists should focus on the science and let specialized IT experts handle the backroom servers and integrated storage systems. This would be a great improvement on how many laboratories are run today, with many people focused on running the computers rather than doing scientific work. The combined “computing appliance plus support” model is an excellent fit for translational proteomics, allowing scientists to focus on the science while external companies handle the backroom proteomics IT, analysis, and workflow customization.

Harnois: One of the reasons I enjoy being involved with software development and commercialization is that a variety of new applications emerge each year that help improve workflows in science-driven organizations. A clear trend I see right now is the scaling of laboratory software, such as CDS, toward enterprise deployments to better standardize global laboratory operations. Technologies such as virtualization and database grid computing will facilitate the implementation of these enterprise CDS deployments, assisting with load balancing and handling system failures. In particular, I think virtualization is a technology to watch, since it is the first step toward cloud computing for software systems such as ELNs.