The LCGC Blog: Full Method Validation Is Still a Glaring Deficiency in Many Forensics Laboratories

The deficiency in method validation for forensics laboratories regularly manifests itself in two steps.

I am flummoxed at the number of times I have encountered documentation of measurements made by public and private forensics laboratories for blood alcohol or drugs of abuse that does not include proper method validation. Over the years, I have been doing some consulting, reviewing what are called discovery documents associated with myriad different cases along these lines. I have also written previously, now more than six years ago, about an apparent lack of understanding of the importance of method validation by lawyers and judges involved in litigation involving forensics measurements (1).

With each case I have worked on, it becomes apparent there are common deficiencies regularly encountered in forensics analysis. Rarely have I been asked to review a case where I felt a comprehensive and completely reliable job had been performed. Of course, I am sure there are forensics laboratories out there that “do it right,” but somehow records from those cases do not often cross my desk.

The deficiency in method validation for forensics laboratories regularly manifests itself in two steps. The first step is where the forensics laboratory does not even provide any indication of method validation when delivering documentation of the case. The second step comes when the documentation made available indicates a lack of rigor in method validation.

The most complete guidance for forensics method validation is the recently published ANSI/ASB Standard 036 (2). Standard 036 replaces previous comprehensive guidance documentation from the Scientific Working Group for Forensic Toxicology (SWGTOX) and provides all of the necessary steps for full and partial method validations pursuant to ISO 17025 performance criteria. ISO 17025 is more of a catch-all guidance on best practices and provides less specific detail about how to accomplish forensic method validation than Standard 036 does.

Overall, method validation is a series of documented steps that demonstrate that the chemical analysis method on a particular instrument is fit for purpose—that, with proper calibration and quality control, the method can provide a reliable result. Any chemical analysis method, be it widely used or not, must be fully validated on the instrument where it will be performed.

Analytical instruments are exceedingly high-precision measuring devices; ultimately, each is manufactured separately (there are also many different manufacturers) and may perform differently, to some degree. Thus, simply noting that the method is run on the same type of instrument at another laboratory does not remove the need to validate the particular instrument that will run that method.

Forensics laboratories should be able to provide details regarding instrument installation and show that the system met manufacturer specifications for performance once installed. Following that, full method validation should have been performed and well documented prior to the analysis of any case samples. Full or partial validation should also be performed following major instrument changes or maintenance. For example, replacing or changing an analytical separation column can have a major impact on method performance; the method performance needs to be rechecked using method validation procedures, and redocumented.

Those of you in the analytical science community are likely well familiar with the concept of method validation. If you have developed a new quantitative method, it is almost impossible to publish it without extensive validation information. Whether using guidance from Standard 036, or some other guidance from, for example, the Food and Drug Administration or the Environmental Protection Agency, method validation will regularly involve determining the accuracy, precision, limits of detection and quantification, specificity, potential for carry-over, and other figures of merit for the method. These are determined by running specific blank, fortified (in other words, spiked), and real samples multiple times and treating the data to provide each measure. The extent of validation is dictated in part by the complexity of the method. For example, sample preparation procedures that contain multiple steps should be evaluated for their efficiencies and recoveries to help ensure that an accurate result is rendered.
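As a rough illustration of how two of these figures of merit are computed, the sketch below calculates percent bias (accuracy) and percent coefficient of variation (precision) from replicate measurements of a fortified sample. The function names, the replicate values, and the fortification level are hypothetical and chosen only for illustration; acceptance limits are deliberately left out, since they should be taken from the applicable guidance document.

```python
from statistics import mean, stdev


def bias_percent(measured, nominal):
    """Percent bias of the mean measured value relative to the nominal (fortified) value."""
    return (mean(measured) - nominal) / nominal * 100.0


def cv_percent(measured):
    """Percent coefficient of variation: sample standard deviation divided by the mean."""
    return stdev(measured) / mean(measured) * 100.0


# Hypothetical replicate results (g/dL) for blood fortified at 0.080 g/dL.
replicates = [0.079, 0.081, 0.083, 0.080, 0.082]
nominal = 0.080

print(f"bias = {bias_percent(replicates, nominal):+.2f}%")
print(f"CV   = {cv_percent(replicates):.2f}%")
```

In a real validation, these calculations would be repeated at several concentrations and over several runs, as the chosen guidance prescribes.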

A problem I regularly encounter is the lack of adequate evaluation of matrix-matched samples during method validation. A variety of steps should involve the analysis of blank or fortified matrix-matched samples to evaluate the specificity of the method and its susceptibility to matrix effects, that is, changes in the analysis caused by the presence of interferences in the sample. Chemicals other than those of primary interest for the determination in a sample (in other words, the sample matrix) can alter the final signal of the target analyte in many different ways.

Blood drug determination by liquid chromatography–mass spectrometry is famously prone to matrix effects associated with ionization efficiency. That is why stable isotopically labeled internal standards are a must for those determinations. Yet, matrix components for many types of chemical instrumental measurements can exert effects throughout the process from sample preparation, through chemical separation, to final detection of signals. The method validation steps involving the measurement of fortified matrix samples are key for establishing lack of interferences (in other words, specificity).

Here is another example. It is often desirable to routinely use neat solutions of target analytes to prepare calibration curves for quantitation of a chemical in a biological fluid sample. In such a case, it needs to be shown during method validation that equivalent calibration results can be obtained from neat solution vs. from biological fluid; the absence of matrix effects needs to be demonstrated. This is not an onerous task, but without this information, you would not know if the results were subject to some bias.
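One simple way to look at this comparison is to fit calibration lines to the neat-solution and matrix-matched responses and compare their slopes. The sketch below does that with an ordinary least-squares fit; the function names and all data values are hypothetical. A rigorous comparison would also account for the uncertainty in each slope rather than relying on the percent difference alone.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def slope_difference_percent(conc, resp_neat, resp_matrix):
    """Percent difference of the matrix-matched slope relative to the neat-solution slope."""
    slope_neat, _ = fit_line(conc, resp_neat)
    slope_matrix, _ = fit_line(conc, resp_matrix)
    return (slope_matrix - slope_neat) / slope_neat * 100.0


# Hypothetical calibrator concentrations and analyte/internal-standard area ratios.
conc = [0.02, 0.05, 0.10, 0.20, 0.40]
ratio_neat = [0.101, 0.249, 0.502, 0.998, 2.003]
ratio_matrix = [0.104, 0.262, 0.521, 1.047, 2.095]

diff = slope_difference_percent(conc, ratio_neat, ratio_matrix)
print(f"matrix slope differs from neat slope by {diff:+.1f}%")
```

A slope difference near zero supports the use of neat calibrators; a consistent offset suggests that results calculated against neat calibrators carry a bias.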

Sometimes, matrix effects can be subtle. Five years ago, I wrote about a case where matrix effects yielded statistically significant differences in the response of an n-propanol internal standard when it was measured from neat solutions compared to real blood samples, for blood alcohol measurements (3). This matrix effect appeared to cause, on average, all blood alcohol determinations on that instrument to be reported 20% higher than they likely were. The laboratory in question never used fortified matrix samples in its validation, so it had no knowledge of this bias. The sad thing is that I encountered this instrument again a couple of years ago, and I was able to show the same matrix effect and bias in the data as I had seen years prior. That instrument has likely been reporting high-biased blood alcohol results since its initial routine use in 2011.
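The arithmetic behind this kind of bias can be sketched with a simplified model. It assumes a linear, through-origin calibration on the analyte-to-internal-standard area ratio, built from neat calibrators. Under that assumption, if the internal standard responds differently in blood than in neat solution while the ethanol response is unchanged, the reported concentration scales by the inverse of the internal standard response ratio. The function name and numbers below are hypothetical and are not taken from the case data.

```python
def reported_concentration(true_conc, is_response_ratio, analyte_response_ratio=1.0):
    """Concentration reported from a neat-solution calibration (simplified model).

    is_response_ratio: internal standard response in matrix divided by its
        response in neat solution (1.0 means no matrix effect).
    analyte_response_ratio: the same ratio for the analyte.
    Assumes a linear calibration through the origin on the area ratio.
    """
    return true_conc * analyte_response_ratio / is_response_ratio


# Hypothetical case: internal standard response in blood is 1/1.2 of that in neat solution.
true_bac = 0.080  # g/dL
reported = reported_concentration(true_bac, is_response_ratio=1 / 1.2)
print(f"true = {true_bac:.3f} g/dL, reported = {reported:.3f} g/dL")
```

In this model, an internal standard response suppressed to about 83% of its neat-solution value is enough to inflate every reported result by about 20%.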

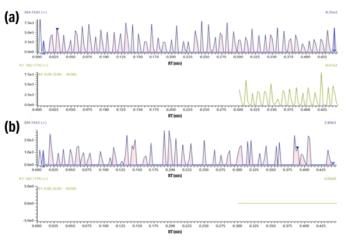

Another common consequence of not evaluating blank or fortified matrix-matched samples during blood alcohol method validation and routine use is that specificity often cannot be demonstrated. Most forensics laboratories use flame ionization detection (FID) for their gas chromatograph. An FID instrument responds to anything carbon-containing that elutes from the column. Often one observes extra so-called “ghost peak” signals in the chromatogram near the ethanol peak and the internal standard peak when real samples are analyzed. These may be present or absent in various neat samples, but if a blank matrix sample was never analyzed to show there are no background signals present at the retention times of ethanol and the internal standard, then there is no way to guarantee the accuracy of the result. Small or large coelutions of interferences with ethanol or the internal standard can have a marked and very unpredictable effect on the final calculated result of the measurement. Standard 036 specifies that blank matrix samples from a minimum of ten different sources be evaluated during method validation to establish the specificity, or the lack of interferences, for the method. I think I have seen that level of validation documented in forensics discovery documents only once or twice.
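The logic of such a specificity check can be sketched as follows: for each blank matrix source, look for any detected peak that falls within a retention time window around ethanol or the internal standard. The function names, retention times, and window width below are hypothetical; only the minimum of ten sources comes from the Standard 036 requirement described above. A real evaluation would also weigh the size of any interfering peak against the method's limit of detection.

```python
def find_interferences(blank_peaks, targets, window=0.05):
    """Return (source, target, retention_time) for each blank peak near a target.

    blank_peaks: dict mapping blank source ID to a list of peak retention times (min).
    targets: dict mapping compound name to its expected retention time (min).
    window: half-width of the retention time window (min); a hypothetical value.
    """
    hits = []
    for source, retention_times in blank_peaks.items():
        for rt in retention_times:
            for name, target_rt in targets.items():
                if abs(rt - target_rt) <= window:
                    hits.append((source, name, rt))
    return hits


def specificity_supported(blank_peaks, targets, window=0.05, min_sources=10):
    """True only if enough blank sources were run and none shows an interference."""
    enough_sources = len(blank_peaks) >= min_sources
    return enough_sources and not find_interferences(blank_peaks, targets, window)


# Hypothetical retention times (min) and blank results from ten blood sources.
targets = {"ethanol": 1.50, "n-propanol": 2.30}
blanks = {f"source_{i}": [] for i in range(1, 11)}
blanks["source_4"] = [0.90, 1.52]  # a ghost peak close to ethanol

for source, name, rt in find_interferences(blanks, targets):
    print(f"{source}: peak at {rt:.2f} min overlaps the {name} window")
print("specificity supported:", specificity_supported(blanks, targets))
```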

I could continue this discussion with other such examples. However, the point I want to make is that the steps for proper method validation are well spelled out. Many forensics laboratories appear to obtain various accreditations without being able to show that they have performed and documented these specific steps. I see poorly validated analyses from accredited forensics laboratories all the time.

The steps to proper method validation are not difficult measurements to make, but they are critical for establishing the reliability of the method. I do not understand why these deficiencies are so common, and I do not understand why they persist. As a result, I have started a business called Medusa Analytical, LLC (

Forensics chemical measurement methods are generally pretty straightforward and should be reliable. There are well-prescribed and straightforward ways to show the method is reliable by following Standard 036 guidance. It just seems wrong that measurement results shown to be deficient in some aspect of method validation should be allowed to stand, especially if they are called into question based on some aspect of a particular case sample, like the presence of ghost peaks. If the appropriate steps were not taken to ensure specificity of the method, then specificity should be doubted, and the reliability of the result should be doubted.

In the end, it always seems like a game about how much deficiency in the forensics chemical analysis process a court or jury will tolerate. Why should there be any tolerance for deficiency when a person’s livelihood is at stake? In my opinion, the chemical analysis should be one of the most reliable pieces of evidence in the case; it is a shame to see that it often is not, because a forensics laboratory apparently decided to cut some corners.

References

- K.A. Schug, Forensics, Lawyers, and Method Validation—Surprising Knowledge Gaps. The LCGC Blog. June 8, 2015. http://www.chromatographyonline.com/lcgc-blog-forensics-lawyers-and-method-validation-surprising-knowledge-gaps

- ANSI/ASB Standard 036, First Edition 2019. Standard Practices for Method Validation in Forensic Toxicology. asbstandardsboard.org (accessed August 16, 2021).

- K.A. Schug, Indisputable Case of Matrix Effects in Blood Alcohol Determinations. The LCGC Blog. Sept. 7, 2016. http://www.chromatographyonline.com/lcgc-blog-indisputable-case-matrix-effects-blood-alcohol-determinations

Kevin A. Schug is a Full Professor and Shimadzu Distinguished Professor of Analytical Chemistry in the Department of Chemistry & Biochemistry at The University of Texas (UT) at Arlington. He joined the faculty at UT Arlington in 2005 after completing a Ph.D. in Chemistry at Virginia Tech under the direction of Prof. Harold M. McNair and a post-doctoral fellowship at the University of Vienna under Prof. Wolfgang Lindner. Research in the Schug group spans fundamental and applied areas of separation science and mass spectrometry. Schug was named the LCGC Emerging Leader in Chromatography in 2009 and the 2012 American Chemical Society Division of Analytical Chemistry Young Investigator in Separation Science. He is a fellow of both the U.T. Arlington and U.T. System-Wide Academies of Distinguished Teachers.
